It’s become clear that computerized image analysis can be a powerful tool for helping to diagnose some diseases, including diabetic retinopathy and some types of macular degeneration. But one question that remains to be answered is how the artificial intelligence should learn what to look for. One approach is to teach the software to analyze and quantify specific known signs of the disease, much as a human specialist would do. The other approach is to allow the system to determine on its own how to identify healthy vs. diseased eyes by showing it a large number of samples of each. Both approaches have shown significant promise.

The First Approved System

The first-ever AI diagnostic system to obtain FDA approval is the IDx-DR system from IDx (Coralville, Iowa). The IDx-DR is designed to analyze retinal photos captured by the Topcon NW400 camera and detect “more than mild” diabetic retinopathy in adults who have diabetes. The IDx-DR falls into the first category of AI disease detectors: Experts have trained the system to look for specific signs of the disease in order to determine whether the disease is present at a predetermined level of severity. If the images are of sufficient quality, the system gives the operator one of two responses: either “More than mild diabetic retinopathy detected: refer to an eye-care professional,” or “Negative for more than mild diabetic retinopathy; retest in 12 months.” Notably, the device doesn’t need a clinician to interpret the image or result, which allows the device to be used by health-care providers who wouldn’t normally be involved in eye care.

The IDx-DR system identifies individuals with more-than-mild diabetic retinopathy or diabetic macular edema, without doctor oversight or an experienced operator.

The FDA looked at the results of a 900-subject study in which retinal images were evaluated by the system. The system’s conclusions were compared to expert analysis by the University of Wisconsin Fundus Photograph Reading Center. The system correctly identified the presence of greater-than-mild diabetic retinopathy or diabetic macular edema 87.4 percent of the time, and correctly identified those not falling into this category 89.5 percent of the time.
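The two percentages above are the system's sensitivity (the share of truly diseased eyes it flagged) and specificity (the share of non-diseased eyes it correctly cleared). The following minimal Python sketch shows how each is computed from a confusion matrix. The counts are hypothetical and are not the IDx-DR trial data.

```python
def sensitivity(tp: int, fn: int) -> float:
    """True positive rate: diseased eyes flagged / all diseased eyes."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: healthy eyes cleared / all healthy eyes."""
    return tn / (tn + fp)


# Hypothetical counts for illustration only; NOT the pivotal trial's results.
tp, fn = 45, 5     # 50 hypothetical eyes that truly have the disease
tn, fp = 160, 40   # 200 hypothetical eyes that truly do not

print(f"sensitivity = {sensitivity(tp, fn):.1%}")  # 90.0%
print(f"specificity = {specificity(tn, fp):.1%}")  # 80.0%
```

Reporting both numbers matters because they trade off. A screening device tuned to miss fewer cases will usually send more healthy patients for referral.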

Michael Abramoff, MD, PhD, a retinal specialist and founder and president of IDx, notes that the FDA has specifically authorized the system for use with the Topcon NW400. “Because there’s no physician supervising the use of the device, we wanted to make sure it’s used exactly as it was in the pivotal trial, and we only tested it with the NW400 in the trial,” he explains. “We chose the NW400 because of its ease of use for operators who have never used a retinal camera. Because guidance is provided by the AI, the operator only needs to have a high-school graduate level of education. In fact, the operators in the clinical trial were asked if they’d ever used a retinal camera; if they had, they couldn’t be part of the trial. Currently, we’re about to start additional studies using other cameras.

“The data showed that 96 percent of patients successfully received disease-level evaluation,” he continues. “That means that the system was able to say yes or no regarding the presence of diabetic retinopathy 96 percent of the time. Furthermore, fewer than a quarter of the subjects needed dilation. The reason we were able to achieve these numbers is that the device actually incorporates two AI systems—one makes the diagnosis, while the other helps the inexperienced operator take high-quality images of the correct part of the retina. It tells the operator that he missed an area, or that one part is out of focus, and he needs to retake the picture.”
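The two-model arrangement Dr. Abramoff describes can be sketched as a quality gate placed in front of a binary classifier. In this minimal Python sketch, the scores, thresholds and names (`Exam`, `screen`, `QUALITY_MIN`, `DISEASE_CUTOFF`) are hypothetical. The real device also coaches the operator through retakes instead of simply returning "no result."

```python
from dataclasses import dataclass
from typing import List, Optional

# The two result messages quoted in the article.
REFER = "More than mild diabetic retinopathy detected: refer to an eye-care professional"
RETEST = "Negative for more than mild diabetic retinopathy; retest in 12 months"

# Hypothetical thresholds; the device's real operating points are not given here.
QUALITY_MIN = 0.5
DISEASE_CUTOFF = 0.5


@dataclass
class Exam:
    quality_score: float  # hypothetical output of the image-quality model (0 to 1)
    disease_score: float  # hypothetical output of the diagnostic model (0 to 1)


def screen(exam: Exam) -> Optional[str]:
    """Return a disease-level result, or None when the images must be retaken."""
    # Stage 1: the quality model decides whether the diagnostic model may run at all.
    if exam.quality_score < QUALITY_MIN:
        return None
    # Stage 2: a single binary, disease-level decision.
    return REFER if exam.disease_score >= DISEASE_CUTOFF else RETEST


def disease_level_rate(results: List[Optional[str]]) -> float:
    """Share of exams that got a yes/no answer (the metric behind the 96 percent figure)."""
    return sum(r is not None for r in results) / len(results)


exams = [Exam(0.9, 0.8), Exam(0.9, 0.1), Exam(0.2, 0.7)]
results = [screen(e) for e in exams]
print(results)
print(disease_level_rate(results))  # 2 of 3 exams received a disease-level answer
```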

Asked why the device exclusively detects “more-than-mild” disease, Dr. Abramoff says this was chosen based on the American Academy of Ophthalmology’s Preferred Practice Patterns for Diabetic Retinopathy.

The IDx-DR successfully produced a disease evaluation for 96 percent of subjects in a clinical trial, and fewer than a quarter of them needed dilation. The artificial intelligence built into the device helps ensure that the operator captures high-quality images.

“The idea was to catch the patients who can’t wait 12 months to be seen,” he says. “The Preferred Practice Pattern says those with more-than-moderate disease or macular edema need to be seen by an ophthalmologist sooner than that. With a different system you could detect those who have a tiny number of microaneurysms—patients who could wait 12 months to be seen—but we chose to detect just those individuals who need to be seen more urgently.”

Dr. Abramoff says that several concerns guided the development of the system. One key concern was creating a system that could make a clinical decision by itself, with no physician oversight. “We care about maintaining both health care affordability and high quality, and this was the best way to do that,” he says. “However, autonomous AI requires much stricter performance standards than something that simply helps a specialist like me to make a clinical decision. In those situations, I’m responsible. Here, I’m relying on the IDx-DR output.

“A second issue was being able to produce disease-level output for the vast majority of patients,” he continues. “Otherwise, you wouldn’t be able to use the system without specialist oversight. Fortunately, we were able to achieve that. A third issue was that the system had to be as unbiased as possible in terms of race, ethnicity, sex and age. Removing potential bias impacts both how you design the AI and also how you validate it. Our detectors identify biomarkers, regardless of these factors.”

Making It Explainable

Dr. Abramoff says another important issue is one that separates this technology from other “machine-learning” systems. “We wanted our system to be explainable,” he says. “One reason for this is psychological. It’s very important to be able to explain how the system reaches a conclusion, even when it makes a mistake. For centuries doctors have known to look for hemorrhages and exudates and microaneurysms and other lesions, because if the eye has those lesions, the patient has diabetic retinopathy. So we decided to build detectors for each of these lesion types, and then combine those outputs into a single disease output.

“Using this approach makes the system explainable,” he continues. “We can validate each of these detectors independently, and we were able to show the FDA how the system works. We can point to an image and say the device detected hemorrhages and exudates here, and therefore it reported the presence of disease. And if the detector doesn’t work properly in some situation, we’ll know why it didn’t.
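This detector-then-combine design can be illustrated with a short sketch in which every positive output carries the lesions that produced it. The fusion rule below is a minimal Python sketch under stated assumptions: the weights, threshold and names (`Detection`, `combine`, `LESION_WEIGHTS`) are hypothetical, and the article does not describe how IDx actually fuses its detector outputs.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical fusion weights and threshold; not IDx's actual rule.
LESION_WEIGHTS = {"hemorrhage": 1.0, "exudate": 1.0, "microaneurysm": 0.5}
DISEASE_THRESHOLD = 2.0


@dataclass
class Detection:
    lesion: str        # which detector fired, e.g. "hemorrhage"
    x: int             # pixel location of the finding in the retinal image
    y: int
    confidence: float  # detector confidence, 0 to 1


def combine(detections: List[Detection], min_conf: float = 0.5) -> Tuple[bool, List[Detection]]:
    """Fuse per-lesion findings into one disease output, keeping the evidence used."""
    evidence = [d for d in detections if d.confidence >= min_conf]
    score = sum(LESION_WEIGHTS.get(d.lesion, 0.0) for d in evidence)
    return score >= DISEASE_THRESHOLD, evidence


found = [
    Detection("hemorrhage", 120, 340, 0.9),
    Detection("exudate", 410, 215, 0.8),
    Detection("microaneurysm", 300, 300, 0.3),  # below min_conf, so it is ignored
]
diseased, evidence = combine(found)
for d in evidence:
    print(f"{d.lesion} at ({d.x}, {d.y})")
print("disease detected" if diseased else "no disease detected")
```

Each detector can also be validated on its own, and the returned `evidence` list lets the system answer the question of where in the image the disease was seen.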

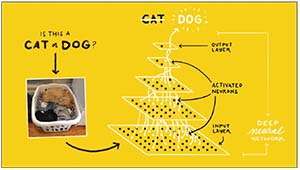

“Some other groups use the ‘black box’ approach,” he notes. “That typically involves a single convolutional neural network. They say, ‘Here are a bunch of images; here’s the disease output you ought to have for each image.’ They don’t know how the system makes its decision. It could be because in the image the disc is in a certain location, and in the training data, people with disease mostly had the disc in that location. In some cases these systems have factored in part of the image that’s outside of the retina, even including the square border around the image of the retina, i.e., the mask. Proponents of this approach don’t care what part of the image was used to make the decision, as long as it makes the right decision. That’s not explainable, because they can’t tell you how it works.

Rather than teaching the AI system what to look for, systems using “deep learning” are exposed to many labeled images, allowing the system to gradually determine by itself what image characteristics relate to that label. Data received at the “input layer” are processed by a system of weighted connections developed based on the system’s past experience, leading to a conclusion presented at the “output layer.” This process can be used to identify the presence of disease based on image characteristics.

“If I give clinicians an image with a bunch of hemorrhages,” he continues, “they’ll say, ‘This is likely diabetic retinopathy.’ If I start taking those hemorrhages away, eventually they’ll say, ‘There’s no disease here.’ Biomarker AI systems like ours work similarly. On the other hand, using the ‘black box’ approach, you can change less than 0.1 percent of the image, so it looks exactly the same to any human clinician—and the system may flip its diagnosis. Those algorithms are highly sensitive to specific alterations of pixels, and not necessarily sensitive to hemorrhages and microaneurysms. That’s a concern because we don’t know what these systems are looking for, or if they fail, why they did. We call it catastrophic failure, because it’s very unexpected. For example, an image might look very abnormal, full of disease, but the system says it’s normal.”
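The pixel-sensitivity problem can be reproduced in miniature with a classifier that weighs thousands of raw pixels. The following minimal Python sketch makes two simplifying assumptions. First, the "black box" is a toy linear model rather than a convolutional network. Second, the perturbation follows the fast gradient sign method from the adversarial-examples literature, which the article does not name. Each pixel moves by only 0.001 on a 0-to-1 scale, which is one reading of a change invisible to a clinician. Because the classifier sums over 10,000 pixels, those tiny nudges add up to a large swing in its score.

```python
import random


def score(w, x, b):
    """Toy linear 'black box': a positive score means 'disease'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


random.seed(0)
n = 10_000                                    # a toy 100 x 100 image, flattened
w = [random.uniform(-1.0, 1.0) for _ in range(n)]
x = [random.uniform(0.0, 1.0) for _ in range(n)]
b = 1.0 - score(w, x, 0.0)                    # bias chosen so the clean image scores about +1.0

# Nudge every pixel by 0.001 against the sign of its weight, clipped to the valid range.
eps = 0.001
x_adv = [min(1.0, max(0.0, xi - eps * sign(wi))) for xi, wi in zip(x, w)]

print(f"clean score:     {score(w, x, b):+.2f}")      # positive: 'disease'
print(f"perturbed score: {score(w, x_adv, b):+.2f}")  # negative: 'no disease'
```

The score shifts by roughly `eps` times the sum of the absolute weights, about 5 here. That is enough to flip the diagnosis even though no single pixel changed visibly.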

Machine, Teach Thyself

Google is one of a number of companies developing a diagnostic system using the other machine-learning approach. “Many different companies are working on different diseases such as glaucoma and macular degeneration where there’s a very large global need for screening,” says Peter A. Karth, MD, a vitreoretinal specialist at Oregon Eye Consultants and a physician consultant to Google. “Google continues to be the research leader in this space. Google’s goal is to create a system that will set the gold standard for accuracy with the ability to parse out all levels of diabetic retinopathy—including diabetic macular edema—with a very economical cost structure. They’re getting closer to a system that will be really effective when deployed.”

Dr. Karth says the approval of IDx-DR is an important milestone. “Of course, unlike Google’s system, IDx-DR is a feature-recognition-based system that teaches the machine to look for certain things, such as bad blood vessels, microaneurysms and hemorrhages,” he notes. “Most of the other companies, including Google, are using systems based on machine learning. In machine learning you don’t teach the machine anything; it learns on its own.”
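To see what "it learns on its own" means in practice, consider a model that receives only labeled examples and adjusts its weights to fit them, without being told which input matters. The minimal Python sketch below uses plain logistic regression trained by gradient descent on synthetic three-number feature vectors. It is a deliberately tiny stand-in for the deep networks such systems actually use, and the data and hidden labeling rule are invented for illustration.

```python
import math
import random


def predict(w, b, x):
    """Probability of the positive label under a logistic model."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=200):
    """Fit weights from labeled examples alone, by gradient descent on log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = predict(w, b, xi) - yi      # gradient of log-loss with respect to z
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


random.seed(1)
X = [[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(400)]
y = [1 if xi[1] > 0 else 0 for xi in X]      # hidden rule: only feature 1 matters

w, b = train_logistic(X, y)
accuracy = sum((predict(w, b, xi) >= 0.5) == bool(yi) for xi, yi in zip(X, y)) / len(X)
print("learned weights:", [round(v, 2) for v in w])
print("training accuracy:", accuracy)
```

Nobody told the model that the second feature was the relevant one. It found this from the labels, which is the sense in which such systems "learn on their own." The same property means that what the model relies on has to be inspected after the fact.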

Asked whether he’s concerned that doctors won’t know what the system is basing its diagnosis on, Dr. Karth says he’s not worried. “The system is definitely picking up on things that we’re not, but I don’t think it’s necessary to know what those things are in order to have a robust algorithm,” he says. “It’s possible that surgeons could benefit from learning what those factors are, and there are teams trying to parse that out, but the results aren’t ready for release yet.”

So which approach (machine learning vs. feature recognition) is likely to be more effective? “There are good arguments on both sides,” notes Dr. Karth. “The IDx people have good data supporting their approach. But with all of the research and people working on using machine learning to allow in-depth grading of diabetic retinopathy and diagnose multiple diseases, I believe this will be the wave of the future. At this point, of course, it’s impossible to say whether one technology or the other will become the gold standard.”

Asked about the nature of the evidence supporting these technologies, Dr. Karth acknowledges that much of the evidence comes from retrospective studies. “Prospective studies are clearly necessary to test the validity of treatment-related advances, but for a lot of imaging technology a prospective study doesn’t matter so much,” he says. “Whether the images were taken today or three years ago makes little difference. An image can be presented to an algorithm at any time; it doesn’t matter whether it’s old or new. It’s true that the systems we’re working on have not been thoroughly tested prospectively so far, but that’s just a punctuation that will have to be done before they’re approved.”

Pros and Cons

Other researchers in the field note that both approaches to “educating” an AI system to detect disease have merit. Among them is Ursula Schmidt-Erfurth, MD, a professor and chair of the Department of Ophthalmology at the University Eye Hospital in Vienna, Austria, and an adjunct professor of ophthalmology at Northwestern University in Chicago, who is known for her work with artificial intelligence. (She founded the OPTIMA project, an interdisciplinary laboratory including computer scientists, physicists and retina experts introducing artificial intelligence into ophthalmic image analysis, in 2013.) Professor Schmidt-Erfurth’s team has conducted a series of studies using artificial intelligence to analyze OCT images to try to predict clinically relevant issues such as the optimal anti-VEGF injection interval when using the treat-and-extend approach to manage neovascular macular degeneration patients. As part of that effort, her team has developed a fully automated AI system that detects, localizes and quantifies macular fluids in conventional OCT images.

Professor Schmidt-Erfurth sees advantages to both ways of using artificial intelligence as an analytical tool. “It makes a lot of sense to train an AI-based algorithm to identify specific characteristics if you have a defined task such as screening for the clinically specified signs of diabetic retinopathy,” she says. “In other words, there is a clear diagnosis, and you verify the diagnosis by looking for the presence of prespecified markers such as microaneurysms, intraretinal microvascular abnormalities and hemorrhages. This is the typical clinical task in ophthalmology and [when used in AI] it’s referred to as classic supervised machine learning.

“It’s a different strategy to let an algorithm search for any kind of anomaly from a large sample of normal and diseased cases,” she continues. “That’s referred to as unsupervised machine learning, or deep learning. The advantage of this approach is that it’s an unbiased search and can find a lot of relevant morphological features. Of course, one then has to correlate these previously unknown features with function or prognosis to make sense out of them.”
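The unsupervised idea she describes can be sketched by having a system learn what "normal" looks like and then score how far each new case departs from it. The following minimal Python sketch uses a simple statistical detector as a swapped-in stand-in for deep learning. It fits a per-feature mean and standard deviation to normal cases only, then reports a new case's largest z-score. The two-number feature vectors are hypothetical.

```python
import statistics


def fit_normal_profile(normal_cases):
    """Per-feature mean and standard deviation, estimated from normal cases only."""
    columns = list(zip(*normal_cases))
    return ([statistics.fmean(c) for c in columns],
            [statistics.stdev(c) for c in columns])


def anomaly_score(case, means, stdevs):
    """Largest absolute z-score across features: how far a case sits from 'normal'."""
    return max(abs(v - m) / s for v, m, s in zip(case, means, stdevs))


# Hypothetical two-number feature vectors (meaning and units invented for illustration).
normal = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.0, 10.0]]
means, stdevs = fit_normal_profile(normal)

print(anomaly_score([1.0, 10.5], means, stdevs))  # about 0.61: looks normal
print(anomaly_score([2.0, 10.0], means, stdevs))  # about 6.1: flagged as anomalous
```

A high score says only that a case is unusual, not what it means clinically. This is why, as she points out, newly found features still have to be correlated with function or prognosis.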

So: What’s Next?

Dr. Karth says it’s hard to predict what will happen next. “I can say that the companies I work with are putting a lot of resources into creating really good algorithms, as well as determining how to package them correctly and get them to the consumer,” he says. “I don’t know of any date for release of the Google system, and honestly, I don’t think they’re in a hurry. In my opinion, the system is good enough to start releasing right now, but the company is thinking the whole thing through carefully. In general, I haven’t heard of many companies rushing to get FDA approval. At this point, rushing things poses a lot of risks.”

Dr. Karth says he’s aware that some ophthalmologists are unsure that artificial intelligence helping to diagnose disease is a good thing. “I believe these systems will be positive for ophthalmology,” he says. “They’re going to reduce blindness, and they’ll increase the number of patients with disease that go to see a doctor. I hear some doctors ask, ‘What happens when a patient has a retinal detachment or a tumor and instead of going to the doctor he goes to a kiosk? It may miss a peripheral retinal detachment or a tumor; it will just tell the person that he doesn’t have diabetic retinopathy.’ That’s a very common type of argument against this type of technology, and it’s certainly true that every patient should have a complete exam. But the AAO doesn’t recommend a yearly screening of the population for retinal detachment, or a tumor, or any number of conditions. They only recommend it for diabetic retinopathy. So yes, the device might fail to detect a tumor in the periphery of the eye; but we know it doesn’t make sense to screen everyone in the world for an eye tumor.”

In the meantime, now that the IDx-DR system has been approved in the United States, it will undoubtedly start appearing across the country soon. Although it’s designed to be usable by people who are not ophthalmologists, Dr. Abramoff says that ophthalmologists are also very interested. “We’re being contacted by a lot of potential customers, including ophthalmologists and retina specialists,” he says. “I think it’s exciting for almost everyone, because from a clinician’s point of view it will bring in a patient population with more disease. The people who have disease will be detected, so there will be more potential for preventing vision loss and blindness.”

“People are sometimes resistant to new technology, but this technology will reduce the barriers to access to care and screening, and it will help reduce blindness,” Dr. Karth concludes. “If this is used and embraced by ophthalmologists, I think it could have a huge positive impact all over the world.”