The cornea subspecialty enjoys a variety of complementary technologies that rely on artificial intelligence to process vast amounts of generated data and aid clinical decision-making. In fact, AI algorithms for early keratoconus detection have been around since the development of computerized corneal topography in the late 1980s and early 1990s.

“The algorithms are widespread and performing well,” says Bernardo T. Lopes, MD, MPhil, PhD, MRCS, FICO, of the University of Liverpool School of Engineering in the United Kingdom, the department of ophthalmology at Federal University of São Paulo and member of the Rio de Janeiro Corneal Tomography & Biomechanics Study Group. “As with everything in life, we’re always seeking to improve what we have or coming back to already established machine learning algorithms to implement them into commercially available devices or websites. Algorithms and AI databases are supporting ophthalmologists around the world.”

In this article, AI developers and experts share how AI is being used for corneal conditions, offer tips for working alongside algorithms and discuss future directions.

The State of Corneal AI


There are many types of AI processes used in cornea today. “Virtually any AI process can be involved in diagnosis, grading or treatment,” Dr. Lopes says. “Some AI processes include algorithms using different neural network architectures, support vector machines, decision trees or random forests, with different degrees of success (see sidebar for terms). We use these not only for screening but also for corneal surgeries. We have algorithms that were designed to predict visual outcomes after refractive surgery and to optimize intracorneal ring implantation and limbal relaxing incisions.”

Much of the buzz in the news around AI innovation in recent years has been centered on the advent of two autonomous AI systems, IDx-DR and EyeArt, for large-scale diabetic retinopathy screening. AI experts say it’s possible but less likely that corneal AI will follow this autonomous path. “Before the AI era, we had already been doing a lot of screening for retinal conditions such as DR, glaucoma suspects, AMD and myopia, so adding AI just made sense,” explains AI expert Daniel Shu Wei Ting, MD, PhD, of the Singapore Eye Research Institute at the Singapore National Eye Centre. “Having said that, compared with retinal conditions, there aren’t as many corneal conditions that require repeated screening, especially at a population level. Corneal conditions usually require specialist evaluations. Based on horizon scanning of the market size for ophthalmology, corneal diseases make up less than retinal diseases, so most R&D funding is going toward the posterior segment.”

One goal that AI developers share is what Dr. Ting refers to as the “democratization of expertise.” “There’s a lack of corneal specialists in many parts of the world,” he says. “If we build AI algorithms around the top experts, package and embed them into different laser and refractive technology, that would be a powerful way of making refractive surgery really safe for many more patients. Developing better segmentation algorithms, for example, will lead to better diagnoses and detection of abnormal areas in the scan.

“Machines are getting smarter,” he continues. “We need to ask ourselves: How do we use AI and data to make things simple yet safe? This is a major trend in health care overall.” Dr. Ting is currently involved with several industry and imaging companies looking in this direction.

As an editorial board member of several peer-reviewed journals, Dr. Ting sees many corneal AI projects come across his desk. “Most of the projects in corneal diagnosis are focused on the classification domain,” he says. “Building a classifier involves datasets and a yes/no response for presence of disease. I also see many segmentation algorithms for corneal layers, which will aid in planning operations as well as postop surveillance: How’s the graft doing? Are there signs of early rejection, inflammation or infection?”

Keratoconus Detection

Artificial Intelligence Terms

Here are some brief descriptions of AI processes mentioned in this article:17

Feedforward neural network (FNN) is a type of AI network that processes information in one direction.

Convolutional neural network (CNN) is a deep learning algorithm used primarily to analyze images. The algorithm takes an input image, assigns importance to certain aspects of the image and then differentiates one image from another.

Support vector machine learning (SVM) is a type of supervised learning model that analyzes data for classification and regression analysis. Using “hyperplanes,” or decision boundaries, the AI classifies data points. Support vectors are the data points closer to the hyperplane. They influence the hyperplane’s position and orientation, and define the margins of a classifier.

Automated decision-tree classification is a type of predictive modeling that moves from observations to conclusions to classify a variable. These algorithms use if/else questions to arrive at a decision.

Random forests or decision forest models (DFM) use multiple individual decision trees that operate simultaneously to classify data. Each decision tree produces a class prediction and the class prediction occurring most often is the random forest’s prediction. Multiple trees protect against individual errors.

Random survival forests (RSF) are a type of random forests method usually used in risk prediction.
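To make the sidebar's description of decision trees and random forests concrete, here is a minimal Python sketch of majority voting across if/else trees. The three "trees," their feature names and their thresholds are invented for illustration only; they are not clinical cutoffs and don't come from any device or study cited in this article.

```python
from collections import Counter

# Hypothetical toy "trees": each one asks a single if/else question.
# Feature names and thresholds are illustrative assumptions, not clinical cutoffs.
def tree_curvature(eye):
    return "suspect" if eye["max_keratometry_d"] > 47.0 else "normal"

def tree_thickness(eye):
    return "suspect" if eye["thinnest_pachymetry_um"] < 500 else "normal"

def tree_asymmetry(eye):
    return "suspect" if eye["inferior_superior_d"] > 1.4 else "normal"

TREES = [tree_curvature, tree_thickness, tree_asymmetry]

def forest_predict(trees, eye):
    """Return the class predicted most often across the individual trees."""
    votes = Counter(tree(eye) for tree in trees)
    return votes.most_common(1)[0][0]

example_eye = {
    "max_keratometry_d": 48.1,      # votes "suspect"
    "thinnest_pachymetry_um": 512,  # votes "normal"
    "inferior_superior_d": 1.9,     # votes "suspect"
}
prediction = forest_predict(TREES, example_eye)
print(prediction)  # suspect (two votes to one)
```

Real random forests learn hundreds of deeper trees from training data rather than using hand-written rules, but the voting step works the same way: one tree's mistake is outvoted by the others.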

The progressive nature of ectasia makes early detection key.1 “We don’t need an AI algorithm to tell us whether a patient has frank keratoconus,” says corneal specialist Jodhbir S. Mehta, MBBS, FRCOphth, FRCS(Ed), PhD, of the Singapore Eye Research Institute. “Instead, we want these algorithms to differentiate cases of subclinical keratoconus from normal eyes. This is often a gray area.”

“AI can help us differentiate subclinical cases because it makes sense of subtle signs that may not otherwise be highlighted in the amount of data we collect,” Dr. Lopes says. “When you analyze this information with artificial intelligence, you build the whole picture.”

Diagnostic imaging usually includes corneal topography with Placido disc-based imaging systems, 3-D tomographic or Scheimpflug imaging and AS-OCT.2 AI algorithms integrate data from these systems to differentiate cases of keratoconus and forme fruste keratoconus from normal eyes, using AI approaches such as feedforward neural networks, convolutional neural networks, support vector machine learning and automated decision-tree classification of corneal shape.2 All of these algorithms are highly precise for keratoconus detection, experts say, with accuracy, sensitivity and specificity rates ranging from 92 to 97 percent.2 Machine learning has been shown to improve imaging devices’ ability to diagnose subclinical disease.3

Subclinical differentiation remains challenging, however, even though AI algorithms have made great leaps. One study conducted in 2017, with no company ties, examined the diagnostic ability of three Scheimpflug devices, Pentacam (Oculus), Galilei (Ziemer) and Sirius (Costruzione Strumenti Oftalmici, Florence, Italy), in differentiating normal and ectatic corneas.4 Direct comparison wasn’t possible since each machine uses different indices for keratoconus screening. All three devices were effective for differentiating keratoconic eyes from normal eyes, but the researchers noted that the cutoff values provided by earlier studies and by manufacturers aren’t adequate for differentiating subclinical cases from normal corneas.

The study included 42 normal eyes, 37 subclinical keratoconic eyes and 51 keratoconic eyes. A keratoconus diagnosis sensitivity of 100 percent was observed in six parameters on Pentacam and one parameter on Galilei. For subclinical keratoconus, 100-percent sensitivity was observed for two Pentacam parameters. All parameters were strong enough to differentiate keratoconus (AUC>0.9). The authors found that the AUC of the Belin/Ambrosio enhanced ectasia total deviation (BAD-D) and the inferior-superior value of Pentacam were statistically similar to the Galilei’s keratoconus prediction index and keratoconus probability (Kprob) (p=0.27), and to the Sirius’ 4.5-mm root mean square per unit area (RMS/A) back (p=0.55). For subclinical differentiation, BAD-D was similar to the Galilei’s surface regularity index (p=0.78) and significantly greater than the Sirius’ 8-mm RMS/A (p=0.002).

In 2018, an unsupervised machine learning algorithm developed by Siamak Yousefi, PhD, and colleagues, labeled a small number of normal eyes as having mild keratoconus.5 The researchers hypothesized that these eyes may have had FFKC. The algorithm was trained on 12,242 SS-OCT images and analyzed 420 principal corneal components. A total of 3,156 eyes with Ectasia Status Indices of zero to 100 percent were analyzed in the study. The algorithm had a specificity of 97.4 percent and a sensitivity of 96.3 percent for differentiating keratoconus from healthy eyes.


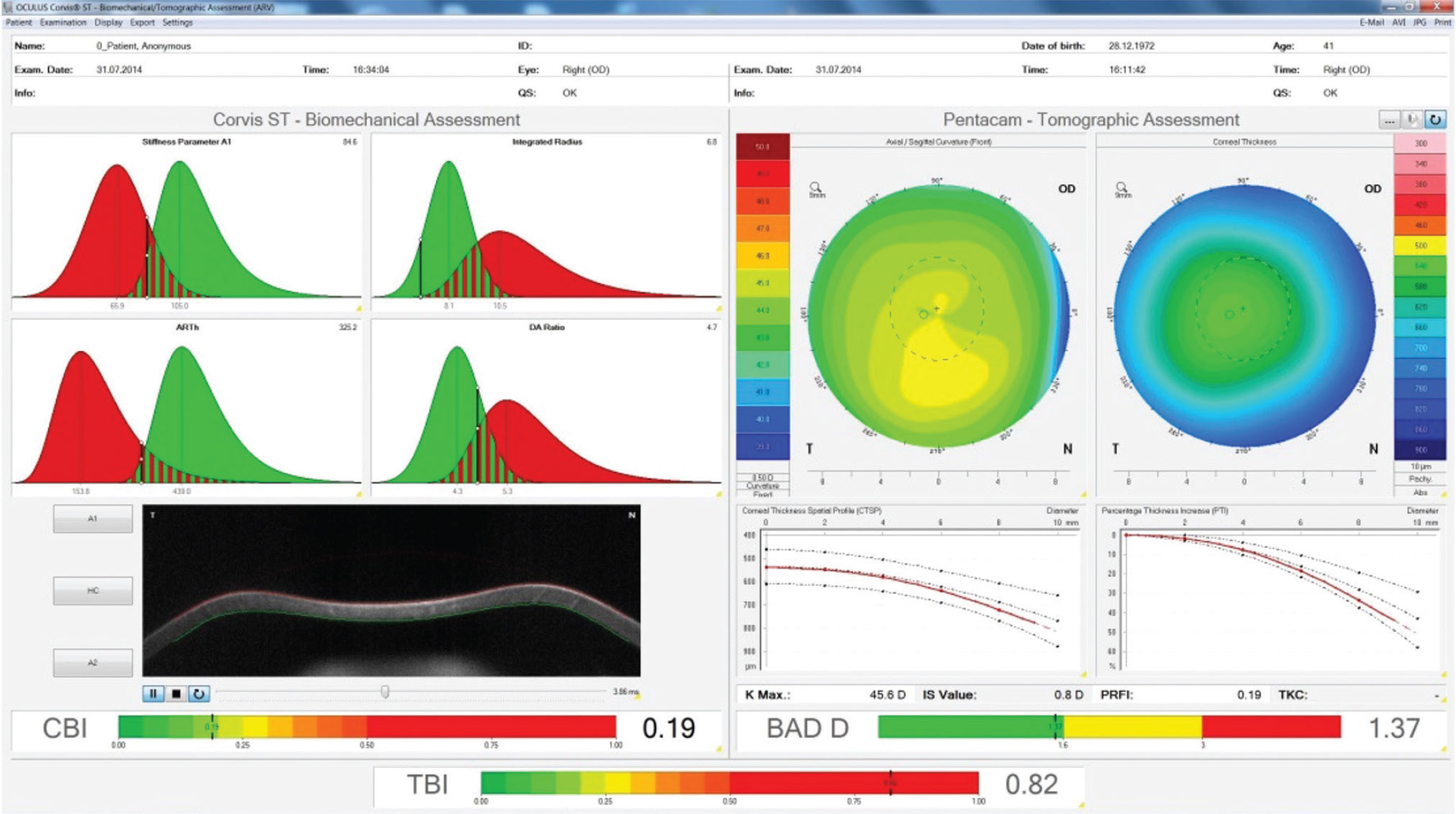

This is the contralateral unoperated right eye of a patient who developed post-LASIK ectasia in the left eye. You can see that while the individual tomographic and biomechanical indices (BAD-D and CBI) are normal, evaluating them in combination using AI shows the high risk of this case. (Image courtesy of Bernardo T. Lopes, MD)

A 2020 study identified a possible predictive variable for subclinical disease differentiation. The study described an automated classification system using a machine learning classifier to distinguish clinically unaffected eyes in patients with keratoconus from normal control eyes.6 A total of 121 eyes were classified by two corneal experts into normal (n=50), keratoconic (n=38) and subclinical keratoconus (n=33). All eyes underwent Scheimpflug and ultra-high-resolution OCT imaging, and a classification model was built using all features obtained on imaging. Using this classification model, the algorithm was able to differentiate between normal and subclinical keratoconic eyes with an AUC of 0.93. The researchers pointed out that variation in thickness profile of the corneal epithelium, as seen on UHR-OCT, was the strongest variable for differentiating subclinical keratoconic from normal eyes.
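Because AUC values appear throughout the studies discussed here, a small Python sketch may help show what the number means. One common interpretation is that the AUC is the probability that a randomly chosen diseased eye receives a higher classifier score than a randomly chosen normal eye. The scores below are made up for illustration and aren't taken from any of the cited studies.

```python
def auc(scores_diseased, scores_normal):
    """Fraction of (diseased, normal) pairs in which the diseased eye
    scores higher; tied pairs count as half a win."""
    wins = 0.0
    for d in scores_diseased:
        for n in scores_normal:
            if d > n:
                wins += 1.0
            elif d == n:
                wins += 0.5
    return wins / (len(scores_diseased) * len(scores_normal))

# Hypothetical classifier scores (higher means "more likely subclinical").
subclinical_scores = [0.9, 0.8, 0.55, 0.4]
normal_scores = [0.6, 0.3, 0.2, 0.1]

example_auc = auc(subclinical_scores, normal_scores)
print(example_auc)  # 0.875: 14 of the 16 pairs are ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why values above 0.9 are reported as strong performance.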

A review of machine learning’s accuracy in assisting in detection of keratoconus, published in the Journal of Clinical Medicine this year, reported that machine learning has the potential to improve diagnosis efficiency but “Presently, machine learning models performed poorly in identifying early keratoconus from control eyes and many of these research studies didn’t follow established reporting standards, thus resulting in the failure of clinical translation of these machine learning models.” The authors suggested this is due in part to a lack of large datasets and differences between corneal imaging systems.7

Experts point out that while the AI algorithms in your diagnostic imaging devices are powerful and consider a host of variables, it’s still a good idea to consider the raw data and confirm the AI’s analysis with other diagnostic testing. AI still isn’t a replacement for a physician’s judgment, they say.

Refractive Surgery

Uncorrected refractive error is a leading cause of decreased vision around the world. Experts say that AI-based refractive surgery screening will play an important role as more patients seek refractive surgery. Current data shows that AI-based screening models are effective at identifying good surgical candidates.8 “I use AI the most for refractive surgery screening,” says Dr. Lopes. “It’s useful for when you have a challenging case and you’re in doubt as to whether the cornea would tolerate LASIK surgery.”

Tyler Hyungtaek Rim, MD, MBA, a clinical scientist at the Singapore Eye Research Institute, and colleagues developed a machine learning clinical-decision support architecture to determine a patient’s suitability for refractive surgery.9 Five algorithms were trained on multi-instrument preoperative data and the clinical decisions of highly experienced experts for 10,561 subjects. The machine learning model had an accuracy of 93.4 percent for LASIK and SMILE. The model (ensemble classifier) with the highest prediction performance had an AUC of 0.983 (95% CI, 0.977 to 0.987) and 0.972 (95% CI, 0.967 to 0.976) on the internal (n=2,640) and external (n=5,279) validation sets, respectively.

The researchers reported that the machine learning models performed statistically better than classic methods such as the percentage of tissue ablated and the Randleman Ectasia Score. They noted that machine learning algorithms that use a wide range of preoperative data can achieve results comparable to physician screening and can serve as safe and reliable clinical decision-making support for refractive surgery.

AI algorithms are also being used to predict refractive surgery outcomes, including risk of post-LASIK ectasia.1 So far, the literature suggests that AI performs similarly to experienced surgeons in terms of safety, efficacy and predictability.

A decision forest model created from feature vectors extracted from 17,592 cases and 38 clinical parameters from patients who underwent LASIK or PRK surgeries at a single center effectively assessed risk with high correlation between actual and predicted outcomes (p<0.001).10 The researchers reported efficacy (the ratio of preop CDVA and postop UDVA) of 0.7 or greater and 0.8 or greater in 92 percent and 84.9 percent of eyes, respectively. Efficacy less than 0.4 and less than 0.5 was achieved in 1.8 percent and 2.9 percent of eyes, respectively.

Interestingly, they noted that eyes in the low efficacy group had statistically significant differences compared with the high efficacy group but were clinically similar. For example, patients in the lower efficacy group were somewhat older, had smaller scotopic pupil size and lower treatment parameters for sphere and cylinder. Preoperative subjective CDVA was the most important variable in the model. The researchers also reported that correlation analysis showed significantly decreased efficacy with increased age, central corneal thickness, mean keratometry and preoperative CDVA (all p<0.001), and increased efficacy with larger pupil size (p<0.001).

Blockchain Technology for AI Evaluation

You’ve probably heard about blockchain in the context of cryptocurrencies like Bitcoin. Blockchain is a decentralized, immutable ledger that records digital assets with encryption technology. Each “block” is timestamped, providing proof that a data transaction occurred when a block was published. Each block contains information about the previous block. They’re chained together and can’t be altered retroactively.

How does this relate to AI technology? “There’s a term called privacy preserving technologies or PPT, which helps to mitigate data-sharing problems,” says Daniel Shu Wei Ting, MD, PhD, of the Singapore Eye Research Institute at the Singapore National Eye Centre. “Different countries have different data sharing and data privacy rules. The United States, the United Kingdom, the EU and Asian-Pacific countries all have different sets of rules. How can you facilitate cross-border collaborations without sharing data? How do you share without needing it to be physically transferred from one country to another?

“This is where blockchain comes into play,” he says. “Blockchain is secure and it’s an immutable platform. Once something is published on it, it can’t be changed. If there’s an update, there’s always a trail to show the order in which something was changed.

“We’ve piloted a project examining how we can use a blockchain platform to govern the AI testing process,” Dr. Ting continues. “I receive a lot of AI papers for peer-review, and one of the challenges I often face, especially when I’m reviewing articles as an editor, is that I don’t know how true the result is. The studies all report something like an AUC of 98 percent or a sensitivity more than 95 percent, and everyone claims to have the best software. How do you actually appraise these studies? How do you test the algorithms? Some journals such as The Lancet or Nature have a data availability statement or an AI algorithms availability statement. So, if you request the data, the researchers will send it to you.

“I tried this once and reached out to the researchers,” he says. “I said, ‘I’d like to test the algorithm’s reproducibility against what was submitted to be published.’ I ran into problems with data privacy rules. Researchers would tell me, ‘I can’t send you the data because my tech transfer office told me it’s against such-and-such rule’ or the AI is licensed. So your hands are tied: Do you trust the people or reject the paper? These are things that are happening with many AI papers right now.”

He says that using a blockchain ecosystem could help create a trusted environment. “It’s a bit like an audit for your taxes,” he explains. “You may submit your tax return and everything’s fine and no one bothers you. But when something unusual happens, we need to investigate. If an AI algorithm gets FDA approval, and then hits its implementation space and the AUC is still consistently showing something like 96 or 97 percent, then no problem. No one’s going to open up the black box of your algorithm and look. But if the paper submitted claims a 98 percent AUC and when it hits its real-world implementation its performance is significantly lower, we want to know why so we can fix it. It might be an honest mistake, but we need to see what was done in the past.

“To do this, we would go back to the original algorithm and the data sets used, but if you go back three or four years, many researchers aren’t able to provide you with the same dataset or the same algorithm because there have been new versions and updates but no permanent records of past changes,” he says.

“We can use blockchain to help correct problems that might arise,” he says. “If you submit an AI to the FDA, a hash value comes with your algorithms and datasets. If I do an audit of the algorithm and dataset four years later, it’ll match the original hash values and we can see that maybe it was just bad luck and fix the problem.”
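The hash-value audit Dr. Ting describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the platform used in his pilot project: the function names (`fingerprint`, `make_block`, `audit`), the block layout and the sample bytes are all hypothetical, and SHA-256 from Python's standard `hashlib` module is simply one widely used hash function.

```python
import hashlib

def fingerprint(algorithm_bytes: bytes, dataset_bytes: bytes) -> str:
    """Hash an algorithm and its dataset together into one hex digest."""
    digest = hashlib.sha256()
    for part in (algorithm_bytes, dataset_bytes):
        # Length-prefix each part so that different splits of the same
        # bytes cannot produce the same fingerprint.
        digest.update(len(part).to_bytes(8, "big"))
        digest.update(part)
    return digest.hexdigest()

def make_block(previous_block_hash: str, payload_hash: str, timestamp: str) -> dict:
    """Build a ledger entry whose own hash covers the previous block's hash."""
    record = f"{previous_block_hash}|{payload_hash}|{timestamp}"
    return {
        "previous_block_hash": previous_block_hash,
        "payload_hash": payload_hash,
        "timestamp": timestamp,
        "block_hash": hashlib.sha256(record.encode("utf-8")).hexdigest(),
    }

def audit(block: dict, algorithm_bytes: bytes, dataset_bytes: bytes) -> bool:
    """Check that the files presented today match what was registered."""
    return block["payload_hash"] == fingerprint(algorithm_bytes, dataset_bytes)

# Hypothetical submission (stand-in bytes, not real model weights or data).
submitted_algorithm = b"model-weights-v1"
submitted_dataset = b"eye_id,label\n001,normal\n002,keratoconus\n"
block = make_block(
    "0" * 64,  # placeholder hash for the first block in the chain
    fingerprint(submitted_algorithm, submitted_dataset),
    "2022-06-03T00:00:00Z",
)

print(audit(block, submitted_algorithm, submitted_dataset))  # True
print(audit(block, b"model-weights-v2", submitted_dataset))  # False
```

Only the hashes are recorded, so the audit can confirm that an algorithm and dataset are unchanged without the underlying patient data ever being placed on the ledger.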

AI-based cataract diagnosis and severity grading using slit-lamp photography and fundus photos have also demonstrated success with high accuracy, sensitivity and specificity.1 One group created a validated deep-learning model that could differentiate cataract and IOL from a normal lens (AUC>0.99) and detect referable, grades III-IV cataract (AUC>0.91), subcapsular cataract and PCO.10

Corneal Ulcers

AI has strong potential for use in diagnosing corneal ulcers and dystrophies because these conditions are easy to image with photography or other diagnostics. “The most common cause of corneal ulcers around the world is bacteria, followed by viruses, and in certain rural regions and countries such as India and China you see high levels of fungal keratitis,” says Dr. Mehta. “We’re training AI algorithms to differentiate these conditions.”

Dr. Mehta was involved in a study of corneal ulcers that demonstrates the potential for AI’s democratization of expertise. “We were involved in a study where ophthalmologists around the world were randomly shown a series of patient photographs from India that were culture-positive for either bacterial or fungal keratitis,” he says. “We measured diagnostic accuracy and then compared the results of the surgeons based in India with those based outside of India, such as in the United States, United Kingdom, Europe and other Asian countries. Basically, we were able to show that when you look at it as a cohort, the surgeons who were best able to pick up fungal keratitis were, unsurprisingly, the surgeons who saw the most cases of fungal keratitis: the doctors in India. The AI software, based only on a slit-lamp imaging protocol, was superior to all of the doctors.

“So, what this shows is that diagnostic accuracy for doctors not working in areas with high levels of fungal keratitis is lower,” he continues. “In the U.K., we probably see only a few fungal keratitis cases each year. In Singapore, we see many more cases of bacterial infection, even though we’re in a tropical climate zone.

“This type of AI software will help improve diagnostic accuracy,” Dr. Mehta says. “Bacterial samples can take days to culture, and fungal samples often take weeks before we get a response. Of course, you have to treat the patient in the meantime. Typically, we treat patients empirically, but with the software, we’re able to get an idea of diagnostic accuracy sooner. This could help many patients, especially when there isn’t access to a laboratory.”

Dr. Mehta says artificial neural networks (ANN) have the potential to turn around results faster and more accurately than traditional diagnostic methods, which involve corneal scraping, microscopy, staining and culturing, and have a culture positivity rate of only 33 to 80 percent.11 One ANN was able to classify 39 out of 43 bacterial and fungal ulcers correctly with an accuracy of 90.7 percent, compared with a clinician rate of 62.8 percent (p<0.01), and a specificity of 76.5 percent and 100 percent for bacterial and fungal ulcers, respectively.12
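The accuracy figure above follows directly from the counts reported: 39 correct classifications out of 43 ulcers. The short Python sketch below reproduces that arithmetic and shows how sensitivity and specificity are defined. The confusion-matrix counts used for sensitivity and specificity are hypothetical placeholders chosen only to demonstrate the formulas; they aren't data from the study.

```python
def accuracy(correct: int, total: int) -> float:
    """Share of all cases classified correctly."""
    return correct / total

def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of diseased cases that the test flags as diseased."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg: int, false_pos: int) -> float:
    """Share of non-diseased cases that the test correctly clears."""
    return true_neg / (true_neg + false_pos)

# Counts reported in the article: 39 of 43 ulcers classified correctly.
ann_accuracy = accuracy(39, 43)
print(f"{ann_accuracy:.1%}")  # 90.7%

# Hypothetical counts, for illustration of the definitions only.
example_sensitivity = sensitivity(true_pos=8, false_neg=2)
example_specificity = specificity(true_neg=9, false_pos=1)
```

Accuracy alone can hide an imbalance between the two error types, which is why studies typically report sensitivity and specificity alongside it.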

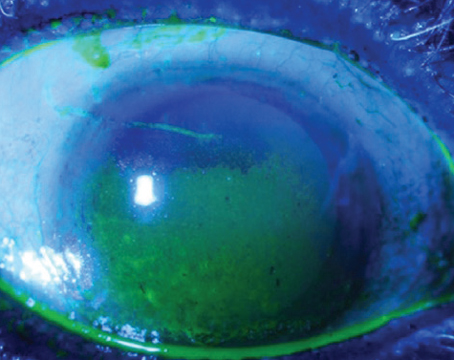


A 2020 report in Scientific Reports described a novel deep learning algorithm for slit-lamp photography that had high sensitivity and specificity for detecting four common corneal diseases: infectious keratitis; non-infectious keratitis; corneal dystrophy or degeneration; and corneal neoplasm.13 The algorithm was trained to detect fine-grained variability of disease features on 5,325 ocular surface images from a retrospective dataset. It was tested against 10 ophthalmologists in a prospective dataset of 510 outpatients. The AUC for each disease was more than 0.91, and sensitivity and specificity were similar to or better than the average values for all the ophthalmologists. The researchers noted that there were similarities in misclassification between the human experts and the algorithm. Additionally, they cited a need for improvement to overcome variations in images taken by different systems, but concluded that this algorithm may be useful for computer-assisted corneal disease diagnosis.

One of the challenges that AI will need to overcome in corneal infection diagnosis is concomitant disease. “About 40 to 50 percent of cases are mixed infections, and that’s going to be much more challenging for us to program and for an AI to pick up,” says Dr. Mehta. “How do you differentiate a viral infection on top of a bacterial infection, or a bacterial infection on top of a viral infection, or polymicrobial bacteria and fungus? Right now, the software also won’t give you an idea about resistance, though it could provide some guidance on antibiotics or other treatments. Understanding the dynamics of how this will affect treatment will be challenging. To do this, we’ll need very large datasets to examine response rates to drugs.”

He says that AI may be advantageous for analyzing in vivo confocal microscopy images. “Confocal microscopy machines are fantastic, but they produce a lot of images and data,” he says. “Analyzing and screening these images is something that can be done using AI software. The software could pick up Acanthamoeba cysts or fungal hyphae.

“An additional challenge is that confocal pictures of infection are often very light-colored, so you need to understand the granularity of the software to be able to pick up good images and areas of interest from a background that’s very white or inflamed or scarred,” he explains. “Sometimes the software will miss things, so again, big datasets are needed to refine training algorithms and perform validation.”

“An AI algorithm is only as good as the data it’s trained with,” Dr. Lopes agrees. “The main problem we have now is the size of the datasets used to train algorithms. You’ll see papers published with as few as 30 days of training to perform a complex task, such as combining topography and tomography to detect ectasia. That will almost certainly overfit the training data and won’t be able to perform as well.

“Another challenge lies in the very nature of corneal data,” Dr. Lopes continues. “Like most biological data, it’s noisy. There are random fluctuations that interfere with the signal, the feature or the pattern you’re trying to detect. If you measure the same eye twice, you won’t have the same outcome because of these random fluctuations. They can be large enough to mask an actual feature you’re trying to detect. So, dealing with noise is a challenge for algorithms to handle, and it’s hard to get big samples to correct for this.”

Corneal Transplants

One of the well-known difficulties with DMEK is getting the graft to adhere to the recipient’s posterior stroma with as little manipulation as possible. “There’s some work using AI software and machine learning models that’s trying to understand which grafts will stick and which ones won’t, and the behavior of grafts, such as whether or not they need to go back for rebubbling after basic surgeries,” says Dr. Mehta.

A study presented at ASCRS in 2020 reported successful graft rejection diagnosis with a novel autonomous AI algorithm.14 A total of 36 eyes with corneal grafts were imaged using AS-OCT, and a deep learning AI algorithm from Bascom Palmer was used to evaluate the graft scans. The researchers compared the AI’s results to clinical diagnoses of Bascom Palmer corneal experts. The corneal experts diagnosed 22 grafts as healthy and 14 as rejected. The AI algorithm correctly diagnosed all healthy grafts and 12 of 14 rejected grafts. For rejection diagnosis, the AUC was 0.9231 with a sensitivity of 84.62 percent and specificity of 100 percent.

Another study published in Cornea reported positive early results with a deep-learning-based method to automatically detect graft detachment after DMEK.15 The researchers trained an algorithm on 1,172 AS-OCT images (609 attached, 563 detached) to create a classifier. The mean graft detachment score was 0.88 ±0.2 in the detached group and 0.08 ±0.13 in the attached graft group (p<0.001). Sensitivity was 98 percent, specificity was 94 percent and accuracy was 96 percent. The authors say further work is needed to include the size and position of the graft detachment in the algorithm.


The Singapore National Eye Centre published a paper in June of this year using machine learning to analyze factors associated with 10-year graft survival in Asian eyes.16 The algorithm included donor characteristics, clinical outcomes and complications from 1,335 patients who underwent DSAEK (n=946) or PK (n=389) for Fuchs’ dystrophy or bullous keratopathy. The researchers used random survival forests analysis to determine the optimal Cox proportional hazards regression model. They found that male sex (HR: 1.75, 95% CI: 1.31 to 2.34; p<0.001) and poor preoperative visual acuity (HR: 1.60, 95% CI: 1.15 to 2.22, p=0.005) were associated with graft failure.

“AI is also being developed for monitoring patients after transplants,” Dr. Mehta says. “We look at the endothelial cell count to get an idea of how healthy the cells are, and this gives us a surrogate marker of how long the graft will survive. There are now several tools available with software to perform cell counting. The problem is that sometimes imaging can be poor, and you need really good-quality images, or you won’t have a true idea of how the graft is functioning. AI software may help to provide better cell data, even if the image quality is poor. However, this has all been done in a research setting so far, not in a clinical setting, so how useful this AI tool will be for monitoring post-corneal transplant patients remains to be seen.”

Dr. Mehta says advances in our understanding of the genetics of Fuchs’ dystrophy may aid AI-customized transplant procedure planning. “We have much more genetic information on the disease now, and I think there’s an opportunity to link imaging to genetics,” he says. “From a surgical standpoint, we’re working toward approaches that don’t require removal of the whole endothelium, but rather just in a specific area around the disease pathology. AI is helping us diagnose and understand who will be a good candidate for a procedure such as DSO. An algorithm could guide you with respect to how much tissue to strip and where the diseased area is, specifically. We never imaged the endothelium much before because we were just stripping the whole thing off.”

Buy-in

Many of these newer algorithms need a lot of work and validation on larger datasets before they’re ready for clinical prime time. Dr. Ting says an additional obstacle they may face is acceptance by corneal specialists. “Speaking with corneal specialists before COVID, many didn’t seem to think that AI can do a better job than a Gram stain or current culture sensitivities, so a few years ago I would have said that the buy-in from corneal specialists may take some work,” says Dr. Ting. “But as you know, technology is getting smarter, and the aging population has resulted in a shortage of medical expertise, and then we had a pandemic, so there’s a lot that’s changed in people’s mindsets in the past two years. There’s been a digital transformation, and now there’s more acceptance of virtual diagnoses and telehealth. These may revolutionize the way corneal diagnoses are made.”

What’s most important for a clinician to keep in mind when using a tool that has AI? “It’s not perfect,” says Dr. Mehta. “There’s no software that I’ve seen with an AUC of 0.9999, so there will always be an error rate. Some people are worried about being replaced by AI. In radiology, for example, AI can pick up things from CT scans and the like, and AI may be better at that than humans. But will it replace the doctor making clinical decisions? I don’t think so.

“Consider all the machines we have now for tomography or biomechanics,” he continues. “Those systems help you make a better decision for your patient and improve their outcome. I think that’s the role of AI—to help the clinician make the best decision, not to dominate the process. The ultimate decision will be with the surgeon. That’s a responsibility that’s never going to go away. You won’t say, ‘Oh, but the AI software told me to do such and such.’ That’s not going to happen. There are limitations to using AI, and it’s important to understand that it’s a tool to guide you with as much knowledge as possible.”

The Future

“In the future, but probably not in the very near future, we may have completely automated diagnostic systems for different corneal conditions, like in other areas of medicine,” says Dr. Lopes. “Refractive screening is probably closest to this. We already have similar tools for retinal disease screening.

“The main challenge of reaching this future is data,” he says. “You need hundreds of thousands of cases to train an algorithm well. We need more centralized data centers with good-quality information if we want proper automated diagnostics or more advanced surgical planning tools. But AI has been around for more than 30 years now, and there’s high acceptance of current tools. I’m optimistic about the future.”

Dr. Mehta has no financial ties to AI-related technology. Dr. Ting is the co-inventor of a deep learning system for retinal disease. Dr. Lopes is a consultant for Oculus.

1. Lopes BT, Eliasy A, Ambrosio Jr. R. Artificial intelligence in corneal diagnosis: Where are we? Curr Ophthalmol Reports 2019;7:204-211.

2. Ting DSJ, Foo V, Yang L, et al. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br J Ophthalmol 2021;105:158-168.

3. Shi C, Wang M, Zhu T, et al. Machine learning helps improve diagnostic ability of subclinical keratoconus using Scheimpflug and OCT imaging modalities. Eye Vis 2020;7:48. [Epub September 10, 2020]. Accessed June 3, 2022. https://eandv.biomedcentral.com/track/pdf/10.1186/s40662-020-00213-3.pdf.

4. Shetty R, Rao H, Khamar P, et al. Keratoconus screening indices and their diagnostic ability to distinguish normal from ectatic corneas. Am J Ophthalmol 2017;181:140-148.

5. Yousefi S, Yousefi E, Takahashi H, et al. Keratoconus severity identification using unsupervised machine learning. PLoS One 2018;13:11:e0205998.

Shi C, Wang M, Zhu T, et al. Machine learning helps improve diagnostic ability of subclinical keratoconus using Scheimpflug and OCT imaging modalities. Eye Vis (Lond.) 2020;7:48.

6. Cao K, Verspoor K, Sahebjada S and Baird PN. Accuracy of machine learning assisted detection of keratoconus: A systematic review and meta-analysis. J Clin Med 2022;11:478. [Epub January 18, 2022]. Accessed June 3, 2022. https://www.mdpi.com/2077-0383/11/3/478/pdf.

7. Ruiz Hidalgo I, Rodriguez P, Rozema JJ, et al. Evaluation of a machine-learning classifier for keratoconus detection based on Scheimpflug tomography. Cornea 2016;35:827-32.

8. Yoo T, Ryu I, Lee G, et al. Adopting machine learning to automatically identify candidate patients for corneal refractive surgery. NPJ Digital Medicine 2019;2:50. [Epub June 20, 2019]. Accessed June 3, 2022. https://www.nature.com/articles/s41746-019-0135-8.

9. Achiron A, Gur Z, Aviv U, et al. Predicting refractive surgery outcome: Machine learning approach with big data. J Refract Surg 2017;33:9:592-97.

10. Wu X, Huang Y, Liu Z, et al. Universal artificial intelligence platform for collaborative management of cataracts. Br J Ophthalmol 2019;103:1553-60.

11. Ung L, Bispo PJM, Shanbhag SS, et al. The persistent dilemma of microbial keratitis: Global burden, diagnosis, and antimicrobial resistance. Surv Ophthalmol 2019;64:255-71.

12. Saini JS, Jain AK, Kumar S, et al. Neural network approach to classify infective keratitis. Curr Eye Res 2003;27:111-6.

13. Gu H, Guo Y, Gu L, et al. Deep learning for identifying corneal diseases from ocular surface slit-lamp photographs. Nature Scientific Reports 2020;10:17851. Accessed June 3, 2022. https://www.nature.com/articles/s41598-020-75027-3.pdf.

14. Tolba M, Elsawy A, Eleiwa T, et al. An artificial intelligence (AI) algorithm for the autonomous diagnosis of corneal graft rejection. Presented at ASCRS May 2020 Virtual Meeting.

15. Treder M, Lauermann JL, Alnawaiseh M, et al. Using deep learning in automated detection of graft detachment in Descemet membrane endothelial keratoplasty: A pilot study. Cornea 2019;39:2:157-61.

16. Ang M, He F, Lang S, et al. Machine learning to analyze factors associated with ten-year graft survival of keratoplasty for cornea endothelial disease. Frontiers In Med 2022;9:831352. Accessed June 3, 2022. https://www.frontiersin.org/articles/10.3389/fmed.2022.831352/pdf.

17. Towards Data Science. Accessed June 3, 2022. https://towardsdatascience.com.
