Artificial intelligence is gaining traction in nearly every field and industry by helping people process data and make decisions. In ophthalmology, experts say it has a bright future and the potential to be a valuable physician-assistance tool. “We’re inundated with data and imaging and other diagnostic criteria for our patients, and we need to be able to process all of that information very quickly,” says Rishi P. Singh, MD, of the Center for Ophthalmic Bioinformatics, Cole Eye Institute, Cleveland Clinic, and a professor of ophthalmology at the Lerner College of Medicine in Ohio. “AI can help us make good clinical decisions, increase reliability and improve the quality and safety of our patients’ outcomes and ensure we don’t miss something inadvertently. We aren’t immune from transposition, data or interpretation issues. Anyone can make a mistake, especially in a high-volume practice.”

However, the technology isn’t immune from certain challenges. “For the AI systems to become part of our treatment algorithm, we need to have a sophisticated infrastructure that can accumulate all the information mounted to the cloud and then communicate to the physician and the patient if there’s an alert and the patient needs to see a retina specialist,” explains Anat Loewenstein, MD, director of the ophthalmology division at Tel Aviv Medical Center and associate dean of the Sackler Faculty of Medicine at Tel Aviv University. “Adoption by patients, especially elderly people, is another important potential hurdle. There’s also a lack of reimbursement patterns and regulatory pathways for AI-assisted managed care, and the physician will need to oversee a huge amount of data. This may interrupt patient flow and become problematic.”

Here, experts review the two FDA-approved AI devices and the algorithms in development, and discuss the challenges AI technology must overcome before widespread implementation.

FDA-approved Devices

There are currently two FDA-approved devices for diabetic eye-disease screening. SriniVas R. Sadda, MD, FARVO, a professor of ophthalmology at the David Geffen School of Medicine at UCLA and the director of Artificial Intelligence & Imaging Research at Doheny Eye Institute, says remote disease screening was a natural entry point for AI technology, since screening systems have many time delays. “Data must be transmitted somewhere, experts must evaluate it and then get information back to the patient, and that patient needs to be scheduled to see a specialist if they have significant disease,” he says. “Every time there’s a delay in the system, the chance the patient doesn’t show up for the next appointment increases because they didn’t get the information. Theoretically, AI can give a patient an answer instantly, to determine whether or not they need to be referred right away or whether they can wait a year. That’s why AI is such a game-changer.”

IDx-DR (Digital Diagnostics) was the first FDA-approved autonomous AI device in any field of medicine. It’s designed to detect diabetic retinopathy and diabetic macular edema. Since its approval in 2018, IDx-DR has been used at Stanford, Johns Hopkins, the Mayo Clinic and in Delaware supermarkets to screen patients for disease. The device is indicated for adults 22 and older who haven’t been previously diagnosed with DR. Currently, IDx-DR is available on the Topcon TRC-NW400 digital fundus camera. IDx-DR was validated against clinical outcomes in a 2018 study in which it demonstrated 87-percent sensitivity and 90-percent specificity for detecting more-than-mild DR.1 Additionally, the algorithm, which was trained to detect biomarkers, performed well on a diverse population.

EyeArt (Eyenuk) is another autonomous AI that’s FDA-approved for detecting more-than-mild and vision-threatening DR in adults. Like the IDx-DR, EyeArt is approved for patients who are diabetic without any known retinopathy. The cloud-based AI system is compatible with two nonmydriatic fundus cameras, the Canon CR-2 AF and the Canon CR-2 Plus AF. In the clinical trial, EyeArt results were compared to human graders at the Wisconsin Fundus Photograph Reading Center. The pivotal trial achieved 96-percent sensitivity and 88-percent specificity for detecting referral-standard or more-than-mild DR. For vision-threatening DR, EyeArt demonstrated 92-percent sensitivity and 94-percent specificity.2
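The sensitivity and specificity figures quoted for both devices come from comparing the AI output against a reference grading. As a minimal sketch, the arithmetic looks like the following; the counts are hypothetical and chosen only to make the calculation easy to follow, and they are not trial data.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    sensitivity = tp / (tp + fn)  # share of diseased eyes the device flags
    specificity = tn / (tn + fp)  # share of healthy eyes the device clears
    return sensitivity, specificity


# Hypothetical counts (not from any trial): 100 diseased and 100 healthy eyes.
sens, spec = sensitivity_specificity(tp=96, fn=4, tn=88, fp=12)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

A screening device tuned for high sensitivity misses few diseased eyes but, at lower specificity, refers more healthy ones.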

“Importantly, eyes that had significant or worrisome retinopathy—ETDRS level 43 or higher, for example—were all correctly identified as more than mild,” says Dr. Sadda, one of the trial investigators. His team at UCLA conducted the initial training of the EyeArt algorithm through NEI/NIH grants with Eyenuk.

These autonomous AI-based screening devices do a good job of detecting referable DR, but single-disease expertise is also a limitation. “Diabetic retinopathy is a very complicated disease,” says Dr. Singh. “It has implications with regard to cataract, glaucoma and refractive error, among others. None of these other conditions is addressed by these AI platforms.”

He doesn’t foresee AI completely replacing DR screening in the near future, unless someone develops a device that can assess all of these conditions. “Nevertheless, AI screening will greatly benefit underserved populations,” he says.

Sophia Ying Wang, MD, an assistant professor of ophthalmology and primary investigator with the Ophthalmic Informatics and Artificial Intelligence Group at Stanford University, agrees that remote screening, while imperfect, will be able to identify many more cases of early retinal disease in populations where it might otherwise go undetected. “We may be able to improve ophthalmic outcomes in this way,” she says.

Artificial Intelligence Keywords

• Artificial intelligence. Definitions vary, but in general AI consists of systems that seem to mimic some human capabilities such as problem-solving, learning and planning through data analysis and pattern identification.

• Machine learning. A subfield of AI that uses computer algorithms to parse, learn from and apply data in order to improve itself and make informed decisions. Machine learning makes your Netflix recommendations possible. It still requires occasional guidance if it turns up an inaccurate prediction.

• Deep learning. A subfield of machine learning that uses a layered structure of algorithms to create a neural network. This allows a machine to make decisions independently from humans. The algorithm can detect an inaccurate prediction on its own using its neural network.

• Neural networks. Sometimes called artificial neural networks (ANNs), neural networks are computing systems modeled after the human brain and are central to deep learning. They’re made up of layers of nodes: an input layer, one or more hidden layers and an output layer. Neural networks rely on training data to learn and improve their accuracy. Google’s search algorithm is a type of neural network. —CL

Natural Language Processing

There are other ways to predict patient outcomes besides analyzing images. Natural language processing is a subfield of artificial intelligence that’s focused on understanding human language as it’s written and spoken, explains Dr. Wang. “My research is particularly focused on using techniques in natural language processing to understand free-text clinical notes in electronic medical records,” she says. “Physicians spend so much time documenting important clinical details in these notes. There’s a wealth of information about patients in clinical notes that isn’t captured elsewhere (such as in billing codes or demographics), and it’s difficult to extract and compute without the aid of natural language processing techniques.

“I believe that with more detailed clinical information about patients, we can build algorithms that have better performance when predicting ophthalmic outcomes, such as glaucoma progression or visual prognosis,” she says. “If we could build better predictive algorithms, effectively a ‘crystal ball’ for the future of the patient, that could be enormously helpful for doctors personalizing their therapies according to the likely prognosis of the patient.”

In a recent paper, Dr. Wang explored different methods of representing clinical free-text ophthalmology notes in electronic health records to build an algorithm that predicts patients’ visual prognosis.3 “Some patients with low vision don’t regain significant vision quickly despite initiation of therapy—like a wet AMD patient receiving anti-VEGF injections who still sees poorly after several months,” she explains. “These patients may benefit from early referral to low-vision rehabilitation services, rather than waiting for their therapy course to be complete.

“If an algorithm could predict that a year later they’d still have poor vision, then perhaps a clinical-decision support system could automatically prompt a referral to low-vision services, rather than having to delay for months waiting to see the results of the therapy before getting a referral,” she continues. “There are many clinical details in the notes that can provide clues to a patient’s prognosis, such as specific exam findings (geographic atrophy, cataract, other comorbidities) and other details. In our work, we’re exploring ways to take that human-readable text and turn it into computer-readable numbers [a method known as word embedding] that could then contribute to algorithms predicting a patient’s prognosis, taking into account all of the special language that ophthalmologists frequently use.”

The deep-learning model trained on domain-specific word embeddings performed better using ophthalmology word embeddings than general word embeddings. These ophthalmology word embeddings are now publicly available for research.
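To make the idea of turning "human-readable text" into "computer-readable numbers" concrete, here is a minimal sketch. It assumes a tiny made-up embedding table and represents a note by averaging the vectors of the words it recognizes. Averaging is only one simple way to represent a note and is not necessarily the method used in the paper. Every vector value below is hypothetical.

```python
import re

# Toy 3-dimensional vectors. Real embeddings are learned from large text
# corpora and have many more dimensions; these values are made up.
TOY_EMBEDDINGS = {
    "geographic": [0.9, 0.1, 0.0],
    "atrophy": [0.8, 0.2, 0.1],
    "cataract": [0.1, 0.9, 0.0],
    "stable": [0.0, 0.1, 0.9],
}


def embed_note(note: str, embeddings: dict[str, list[float]]) -> list[float]:
    """Average the vectors of the known words in a note; unknown words are skipped."""
    tokens = re.findall(r"[a-z]+", note.lower())
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        # No known words: fall back to a zero vector of the right length.
        dim = len(next(iter(embeddings.values())))
        return [0.0] * dim
    return [sum(column) / len(vectors) for column in zip(*vectors)]


note_vector = embed_note("Geographic atrophy OD, cataract OU.", TOY_EMBEDDINGS)
print([round(x, 3) for x in note_vector])
```

The resulting fixed-length vector could then be fed to a predictive model alongside structured data such as visual acuity. Abbreviations like "OD" and "OU" are skipped here because the toy table doesn't contain them, which hints at why a domain-specific vocabulary matters.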

Image Processing

Dr. Wang’s group is also involved in a project for cataract surgical videos, involving “computer vision” or image processing techniques. “The idea is to train an AI algorithm to be able to automatically detect what steps are being performed in a surgical video at any given moment (e.g., use of trypan blue, capsulorhexis, phacoemulsification or anterior vitrectomy). We also want to be able to detect key landmarks of the eye (such as where the limbus or the pupil margin are) and where important instruments are inside the eye (such as where the second instruments or the phacoemulsification tip are).”

She says that recognizing what’s happening in a cataract surgery could be very helpful for many different tasks. “For trainee surgeons, recognizing how long each step is taking or what path the instruments are taking in the eye could prompt automated feedback metrics to help them grow into better surgeons. Or, perhaps one day a computer vision system like this could form the basis of robotic-assisted cataract surgery that could warn surgeons just before they’re about to break the capsule and thus avert complications. There are many potential-use cases.”

EyeArt (Eyenuk) is a cloud-based autonomous AI system that can detect clinically significant macular edema in addition to diabetic retinopathy.

Other AI in Development

Using AI to obtain better images, to help ophthalmologists process large amounts of data and to let patients image themselves at home is on the horizon. Many companies are working on AI technologies in the United States. Here are just a few:

• Self-imaging. Several self-operated OCT systems have shown promising results, says Prof. Loewenstein. She says that home OCT monitoring will contribute to increased accuracy and individualized treatment, and will shorten the interval between fluid recurrence and the next treatment.

—Using the time-domain full-field OCT prototype device SELFF-OCT, at least 76 percent of the study population could obtain at least one gradable image.4

—A sparse-sampling OCT prototype device, the MIMO_02, has successfully identified ocular comorbidities of AMD patients.5

—NotalVision’s OCT Analyzer (NOA) is a spectral-domain-based OCT that enabled 90 percent of patients with wet AMD (mean age: 74) to perform successful self-imaging in a study.6,7 “When the interpretation of retinal fluid by human graders was compared to the interpretation of the NOA, the agreement on retinal fluid recurrence was 97 percent and the agreement on absence of retinal fluid was 95 percent,” says Prof. Loewenstein, who is also a consultant to NotalVision. “These studies are exciting because they contribute to the treatment of chronic ocular conditions, which aren’t easily managed and require constant monitoring, placing a burden on patients, their caregivers and clinicians as well.”

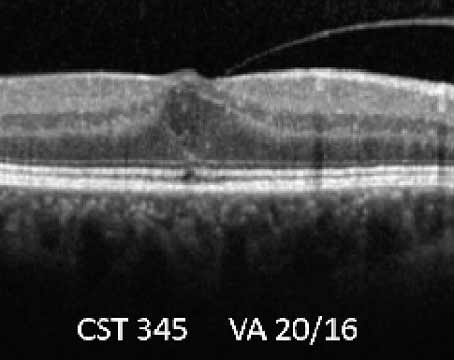

The NOA is an AI software application trained to automatically detect retinal fluid. It quantifies intraretinal and subretinal fluid using cube scans generated from B-scans on OCT (Figure 1). Within each cube scan, the system ranks B-scans by the size of the fluid area and produces en face fluid thickness maps. Prof. Loewenstein says the system uses the following steps: (a) accurate localization of the ILM and RPE areas, (b) fluid identification using standard imaging, (c) machine-learning-based classification of fluid-filled areas and (d) quantification of the retinal fluids (fluid volume).
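The last step, quantification, and the ranking of B-scans by fluid area can be sketched as simple counting once a segmentation exists. The example below is illustrative only and is not the NOA implementation. It assumes steps (a) through (c) have already produced a binary mask per B-scan (1 = fluid), and the voxel volume is a hypothetical value.

```python
def fluid_area_per_bscan(masks):
    """Count fluid pixels in each B-scan mask (a list of rows of 0/1 values)."""
    return [sum(sum(row) for row in bscan) for bscan in masks]


def rank_bscans_by_fluid(masks):
    """Return B-scan indices ordered from largest to smallest fluid area."""
    areas = fluid_area_per_bscan(masks)
    return sorted(range(len(masks)), key=lambda i: areas[i], reverse=True)


def fluid_volume_nl(masks, voxel_volume_mm3):
    """Total fluid volume in nanoliters: fluid voxel count times voxel volume."""
    voxels = sum(fluid_area_per_bscan(masks))
    return voxels * voxel_volume_mm3 * 1000.0  # 1 mm^3 = 1 microliter = 1000 nL


# A tiny hypothetical cube scan: three B-scans of 2 x 3 pixels each.
cube = [
    [[0, 1, 0], [0, 0, 0]],
    [[1, 1, 0], [0, 1, 0]],
    [[0, 0, 0], [0, 0, 0]],
]
print(rank_bscans_by_fluid(cube))            # most fluid-heavy B-scan first
print(fluid_volume_nl(cube, 0.001))          # assumed voxel volume of 0.001 mm^3
```

In practice, intraretinal and subretinal fluid would each get their own mask, so the same counting yields a separate volume for each compartment.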

Figure 1. Intraretinal fluid (red curve) and subretinal fluid (yellow curve) volume trajectories from Notal OCT Analyzer (NOA) segmentation of daily home OCT self-images of a patient’s right and left eye. Vertical dashed lines indicate the dates of intravitreal injections. Retinal fluid exposure described by the area under the curve (AUC) between treatments differs significantly between eyes, despite similar fluid volumes measured on the day of office treatment visits, illustrating the medical insights gained from daily OCT imaging at home. Minimum (Vmin) and maximum (Vmax) fluid volume, as well as inclining (si) and declining (sd) slope of fluid volume trajectories, describe disease activity and treatment response, in some cases broken up in distinct phases of fluid volume increase (si,1*, si,1**) during a treatment cycle.
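The point of the figure, that two eyes can show the same fluid volume on visit days yet differ in total fluid exposure, can be illustrated with a short calculation. This sketch assumes the area under the curve is computed with the trapezoidal rule, which the source doesn't specify. The volume series are hypothetical.

```python
def fluid_exposure(days, volumes_nl):
    """Trapezoidal area under the volume-time curve, in nL x days."""
    if len(days) != len(volumes_nl) or len(days) < 2:
        raise ValueError("need matching series with at least two points")
    points = list(zip(days, volumes_nl))
    return sum(
        (d1 - d0) * (v0 + v1) / 2
        for (d0, v0), (d1, v1) in zip(points, points[1:])
    )


# Hypothetical treatment cycle: injections on day 0 and day 28.
days = [0, 7, 14, 21, 28]
eye_a = [10, 2, 2, 6, 10]   # fluid returns midway through the cycle
eye_b = [10, 0, 0, 0, 10]   # retina stays dry until just before the visit

for name, volumes in (("A", eye_a), ("B", eye_b)):
    print(name, fluid_exposure(days, volumes), min(volumes), max(volumes))
```

Both eyes measure 10 nL on each visit day, so office imaging alone would rate them alike. The daily series shows eye A carrying twice the fluid exposure of eye B over the cycle.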

A 2021 study evaluating the NOA’s performance included eight eyes of four patients (BCVA 20/50).8 Patients performed daily self-imaging for one month and completed it successfully 94 percent of the time. Prof. Loewenstein says retinal fluid was found in 93 of the 211 scans: intraretinal and subretinal fluid in 49 and 44 scans, respectively. Mean volume of fluid recurrence detection was 1.6 nL. There was also a 94.7-percent level of agreement between human graders and NOA on fluid status.

Prof. Loewenstein says the at-home device provided insights regarding the diagnosis of macular neovascularization, its classification, localization of retinal fluid in wet AMD, conversion from dry to wet AMD, visual acuity prognosis and retreatment decisions. She says additional steps such as grouping by uniform patient characteristics (same AMD stage and treatment regimen) and robust validation of the algorithm on larger populations and over longer periods of time are needed to improve the AI system.

• Predicting macular thickness from fundus photos. A proof-of-concept study published in 2019 assessed Genentech/Roche’s deep learning model, which could predict key quantitative time-domain OCT measurements related to macular thickness from color fundus photographs.9 The best deep learning model was able to predict central subfield thickness (CST) ≥250 µm and central foveal thickness (CFT) ≥250 µm with an AUC of 0.97 and 0.91 (95% CI), respectively. To predict CST and CFT ≥400 µm, the best deep learning model had an AUC of 0.94 and 0.96, respectively. The researchers say this model could enhance DME diagnosis efficiency in teleophthalmology programs.
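For readers less familiar with the metric, the AUC reported here is the area under the ROC curve. It equals the probability that the model scores a randomly chosen positive case (for example, CST ≥250 µm) higher than a randomly chosen negative one. A minimal sketch of that pairwise definition follows. The scores and labels are hypothetical.

```python
def roc_auc(scores, labels):
    """ROC AUC as the share of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical model outputs for six eyes; label 1 = thickened macula on OCT.
scores = [0.90, 0.80, 0.35, 0.60, 0.30, 0.10]
labels = [1, 1, 1, 0, 0, 0]
print(round(roc_auc(scores, labels), 3))
```

An AUC of 0.5 means the model ranks cases no better than chance, and 1.0 means every positive case outranks every negative one.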

• Automated B-scan reading. A preliminary study presented by Zeiss in an ARVO 2021 poster session evaluated an AI-based tool for reading B-scans.10 The researchers trained a deep learning algorithm on 76,544 B-scans of 598 glaucoma patients and 25,600 B-scans of 200 healthy subjects to predict if a given B-scan might be “of interest” based on ground-truth labels from retinal specialists in healthy eyes and eyes with retinal pathologies. The algorithm generated 100-percent and 79-percent agreement in healthy and glaucomatous eyes, respectively. Four cases of disagreement resulted from unusual retinal curvature, unusual contrast in the vitreous and other false positives with inference scores near the algorithm cut-off.

• Expert-level 3D diagnostic scan reading. In 2014 Google acquired DeepMind, one of the world’s leading AI companies. Varun Gulshan, PhD, and his colleagues at Google put the Inception-v3 convolutional neural network proposed by AI researcher Christian Szegedy, PhD, and colleagues to work on the EyePACS-1 dataset (n=9,963 images) of routine DR screening images in the United States and India, and the Messidor-2 dataset (n=1,748 images). In the paper, published in JAMA in 2016, the referable DR algorithm had an AUC of 0.991 for EyePACS-1 and 0.99 for Messidor-2. In 2019 they announced real-world clinical use of the CE-marked algorithm at Aravind Eye Hospital in Madurai, India.

Google DeepMind is working with Moorfields Eye Hospital to develop algorithms for early DR and AMD detection. In 2016, the group signed a formal research collaboration agreement to share anonymized historical OCT scans. Their proof-of-concept study, published in Nature Medicine in 2018, demonstrated expert-level performance with 3D diagnostic scans for the first time.11

Google DeepMind also improved AI interpretability, part of a problem known as the “black box.” This is often encountered in “unsupervised” approaches. “The AI makes a prediction, but we don’t always know why,” says Dr. Sadda. “A classic example of this in AI is an algorithm that predicted dog breeds. It identified certain dogs as being Alaskan huskies, but it was making the prediction not because of specific dog features but because of the background—it always saw huskies in the snow. It got the right answer for the wrong reasons.”

Susan Ruyu Qi, MD, an ophthalmology resident and master’s student in clinical informatics management at Stanford, explains that Google approached the black box problem using a two-step process of segmentation and classification instead of training a single neural network to identify pathologies from images.12 It has since undergone adjudication improvements with regard to grading.13 Google also collaborated with Optos in 2017 to develop early-detection algorithms for diabetic eye disease, likely making use of Optos’ ultrawide-field fundus imaging.

• Predicting disease progression. AI is predicted to play a major role in the fast-approaching era of personalized medicine and individualized patient care. At UCLA, Dr. Sadda and his colleagues are working on ways to predict patient outcomes using AI. “We’ve developed algorithms that can predict development and progression of atrophy in AMD,” he says. “We’ve also developed a similar algorithm for predicting progression in Stargardt’s disease, which is the biggest cause of juvenile macular degeneration.”

• Uncovering new biomarkers. “In our group, we’re particularly interested in how we can automatically detect features we believe are associated with higher risk for disease progression,” Dr. Sadda continues. “We even take it a step further and let the technology make a prediction based on its own assessment so we can reverse engineer its decision and learn about predictive features we haven’t previously understood. Some call this ‘unsupervised classification,’ and it may open up a space where AI can help us gain new insight into disease mechanisms and pathology.”

A patient undergoes screening for diabetic retinopathy with the IDx-DR, which is intended for use mainly in primary care settings.

Algorithm Imperfection

Even though these algorithms will be rigorously tested against a large dataset that’s hopefully representative, the question always remains: will it work for the specific patient in front of you? “It might not,” says Dr. Sadda. “All algorithms will have a particular sensitivity and specificity, and we’ll still have to use our own clinical judgment. These devices will likely be employed as physician assistance devices initially. They’re not perfect.”

“With any disease detection system, we have to think carefully about the population on which the systems were developed and validated, and then in what population the system is going to be deployed,” says Dr. Wang. “It may not be reasonable to expect that a system developed in the United States should work just as well in Mumbai or Johannesburg, and vice versa, as the underlying patient population and disease characteristics could be quite different. If one purported goal of AI is to bring ‘personalized medicine’ to patients, it may be entirely appropriate, and indeed desirable, to have AI algorithms that work well for the specific population that they are going to be deployed in, rather than trying to aim for an elusive goal of ‘one system that works for the whole world.’ ”
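One way to see why the deployment population matters is that, even if sensitivity and specificity carry over unchanged, the meaning of a positive result depends on how common the disease is. The sketch below applies Bayes' rule to the 87-percent sensitivity and 90-percent specificity quoted earlier for IDx-DR. The prevalence values are assumptions chosen for illustration, not figures from any study.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied to a population with the given prevalence."""
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must be strictly between 0 and 1")
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # chance a positive screen is real disease
    npv = true_neg / (true_neg + false_neg)  # chance a negative screen is truly clear
    return ppv, npv


# Assumed prevalence of more-than-mild DR in three hypothetical screening settings.
for prevalence in (0.05, 0.10, 0.30):
    ppv, npv = predictive_values(0.87, 0.90, prevalence)
    print(f"prevalence={prevalence:.0%}  PPV={ppv:.1%}  NPV={npv:.1%}")
```

Under these assumptions, roughly half of positive screens at 10-percent prevalence are false alarms, while at 30-percent prevalence about four in five are true disease. In reality sensitivity and specificity may shift between populations as well, which compounds the effect.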

Practical Use Questions

It’s important to remember that disease detection and screening isn’t an end in itself. “We’ll have to think carefully about what we’re planning to do to help patients who screen positive,” says Dr. Wang. “Is there an intervention that could help improve their outcomes? Are they able to access this intervention? Is the infrastructure set up to facilitate follow-up?”

Highlights from the 2021 Ophthalmic AI Summit

• AI/Reader Alignment. “Ursula Schmidt-Erfurth, MD, demonstrated in her talk on geographic atrophy and AI that there’s a disconnect between the way we interpret images and the way an AI platform interprets images. There’s a higher degree of alignment between the AI and true outcomes versus grader reading, which is important to keep in mind.”1 She noted that deep learning can empower experts to understand images better. She uses the Vienna Fluid monitor algorithm in her macular clinic to manage nAMD patients.

• EHR Data and Glaucoma. “Sally L. Baxter, MD, MSc, pointed out in her talk on predictive modeling of glaucoma progression using EHR data that AI has huge potential in glaucoma too. We’re heavily focused on retina right now, but data interpretation and analysis will also be incredibly important to show disease progression and changes over time in glaucoma.”2

• Telemedicine. “David Myung, MD, discussed the impact of teleophthalmology and AI on diabetic eye exam adherence, particularly low-cost reading methods in public health forums and how that will benefit patients.”3 In January 2021, the 92229 CPT code for AI exams went into effect. —CL

1. Schmidt-Erfurth U. GA diagnosis and therapy by AI. Presented at the Ophthalmic AI Summit, June 2021.

2. Baxter SL. AI and predictive modeling of glaucoma progression using EHR data from the NIH All of Us Research Program. Presented at the Ophthalmic AI Summit, June 2021.

3. Myung D. Impact of teleophthalmology and AI on diabetic eye exam adherence in Bay Area primary care clinics during COVID-19. Presented at the Ophthalmic AI Summit, June 2021.

Here are some other questions to consider:

• Can you use it? Dr. Singh says that providers will need to understand how the algorithms work. “We have to be able to explain and understand the algorithms, comprehend outcomes and potentially troubleshoot if there’s a problem,” he says. “They can’t be ‘black box’ algorithms. What will you do if the data isn’t showing up? Are you able to support the software? Do you understand the AI might require significant server space or integration with a camera? For health-care organizations, they’ll also need to understand the financial costs and potential benefits associated with AI and then decide whether they want to be involved with such a device.”

• How will you choose among the AI technologies? With so many options in development by different companies, how will physicians and health-care organizations be able to make the most educated choices? Companies will present their clinical trial data, but how can you compare the numbers from one study to the next? The first set of international standards for reporting of clinical trials for AI was released in September 2020.14 The reporting guidelines expand upon the SPIRIT 2013 and CONSORT 2010 frameworks to boost robustness and transparency.15,16 “We hope that eventually there will be universal standard test decks from the FDA or other regulatory bodies that new algorithms can be tested against,” says Dr. Sadda.

• How will software updates be regulated to protect patients? “Another important thing to consider is that these algorithms continue to improve as they learn, so the version of the algorithm you’re using may be very important,” he continues. “This will also be a challenge for the FDA and regulatory bodies. Will they need to pre-approve every little change in the software? We’re used to a new device that gets approved and doesn’t change for some time until the next generation. But AI is very incremental and undergoes continuous improvement. How do you regulate this so that it protects patients? This will be a major challenge in the future.”

On the Same Page?

AI referral patterns are fairly straightforward—if a patient has any amount of potential DR, they’re usually referred to an ophthalmologist or retinal specialist. However, this may result in too many referrals of patients who could otherwise be managed by optometrists or wait a year for another retinal exam. “The issue with these platforms is that it’s not as simple as saying ‘always refer the patient,’ ” says Dr. Singh, who is a member of the ASRS Artificial Intelligence Task Force. “Many times the AAO and AMA recommend annual referral. If the patient has only a small amount of retinopathy they may not necessarily need to do anything at that time.”

That’s where the disconnect is, he says. “I’ve talked to the AAO and ASRS about setting up more standards for working with AI platforms, to ensure these platforms understand the referral patterns the organizations follow. A lot of these platforms don’t necessarily involve the Academy or ASRS in their development or strategic planning, so as a result, recommendations come out of the machine that aren’t aligned with what we’re doing. We have the opportunity in AAO and ASRS to work with AI platforms and develop better focus on referral patterns and interpretation. We want to ensure the AI reflects what we say in clinical practice too. They need clinical validation or alignment with what we do in practice.”

Dr. Sadda says it’s important that ophthalmologists take an active role in shaping how AI technology becomes part of the field. “We need to have some control over how these technologies are deployed,” he says. “We need to make sure they’re safe and provide the best care for our patients, whatever the proposed application is. Ophthalmologists must be proactive.”

Dr. Sadda has NEI/NIH grants with Eyenuk. He is a consultant for Optos, Heidelberg Engineering and Centervue and receives research imaging instruments from Optos, Heidelberg Engineering, Centervue, Nidek, Topcon and Carl Zeiss Meditec. Prof. Loewenstein receives grants from Roche and Novartis and is a consultant for Allergan, Bayer, WebMD, NotalVision and Beyeonics. Dr. Singh is a consultant for Novartis, Genentech, Regeneron, Bausch + Lomb, Gyroscope and Asclepix. He also receives research support from NGM Biopharmaceuticals. Dr. Wang has no relevant commercial financial disclosures.

1. Abràmoff MD, Lavin PT, Birch M, et al. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med 2018;1:39.

2. Lim J, Bhaskaranand M, Ramachandra C, Bhat S, Solanki K, Sadda S. Artificial intelligence screening for diabetic retinopathy: Analysis from a pivotal multi-center prospective clinical trial. Presented at ARVO Imaging in the Eye Conference 2019. Vancouver, BC, Canada, 2019.

3. Wang S, Tseng B, Hernandez-Boussard T. Development and evaluation of novel ophthalmology domain-specific neural word embeddings to predict visual prognosis. Int J Med Informatics 2021;150:104464.

4. von der Burchard C, Moltmann M, Tode J, et al. Self-examination low-cost full-field OCT (SELFF-OCT) for patients with various macular diseases. Graefes Arch Clin Exp Ophthalmol 2020;259:1503-11.

5. Maloca P, Hasler PW, Barthelmes D, et al. Safety and feasibility of a novel sparse optical coherence tomography device for patient-delivered retina home monitoring. Transl Vis Sci Technol 2018;7:4:8.

6. Nahen K, Benyamini G, Loewenstein A. Evaluation of a self-imaging SD-OCT system for remote monitoring of patients with neovascular age related macular degeneration. Klin Monbl Augenheilkd 2020;237:12:1410-1418.

7. Keenan TDL, Goldstein M, Goldenberg D, Zur D, Shulman S, Loewenstein A. Prospective longitudinal pilot study: Daily self-imaging with patient-operated home OCT in neovascular age-related macular degeneration. Ophthalmol Sci 2021. (In press).

8. Loewenstein A, Goldberg D, Zur D, et al. Artificial intelligence algorithm for retinal fluid volume quantification from self-imaging with a home OCT system. Invest Ophthalmol Vis Sci 2021;62:351. ARVO Abstract, June 2021.

9. Arcadu F, Benmansour F, Maunz A, et al. Deep learning predicts OCT measures of diabetic macular thickening from color fundus photographs. Invest Ophthalmol Vis Sci 2019;60:852-57.

10. Lee G, Makedonsky K, Tracewell L, et al. Evaluation of an OCT B-scan of interest tool in glaucomatous eyes. Abstract presented at ARVO 2021. https://www.zeiss.com/content/dam/med/ref_international/events/arvo-2021/arvo-posters-and-presentations/lee_arvo_2021_bscan_glaucoma_vs_healthy_comparison_poster.pdf.

11. De Fauw J, Ledsam JR, Ronneberger O, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Med 2018;24:1342-50.

12. Qi SR. Google DeepMind—Improving the interpretability of “Black Box” in medical imaging. https://medium.com/health-ai/google-deepmind-might-have-just-solved-the-black-box-problem-in-medical-ai-3ed8bc21f636.

13. Krause J, Gulshan V, Rahimy E, et al. Grader variability and the importance of reference standards for evaluating machine learning models for diabetic retinopathy. Ophthalmology 2018;125:8:1264-72.

14. Patients set to benefit from new guidelines on artificial intelligence health solutions. University of Birmingham, UK. Press Release: September 9, 2020.

15. Liu X, Rivera SC, Moher D, et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT AI-extension. Nature Med 2020;26:1364-74.

16. Rivera SC, Liu X, Chan A, et al. Guidelines for clinical trial protocols for interventions involving artificial intelligence: The SPIRIT-AI extension. Nature Med 2020;26:1351-63.