There are numerous artificial intelligence algorithms in the works for glaucoma, from disease detection tools to progression forecasting models. Experts say it will take considerable effort to introduce advanced AI models into the glaucoma clinic, but they agree that AI’s potential for improving patient care and addressing inequalities is very real.

In this article, AI innovators and experts discuss the obstacles to AI development for glaucoma, current research and what’s on the clinical horizon.

A Matter of Consensus

Artificial intelligence will help clinicians make better use of the available diagnostic information and tests, experts say. Photo: Getty Images.

Certain features of glaucoma make the disease particularly difficult for AI to detect and diagnose. “When you see signs of diabetic retinopathy in a fundus photograph, there’s hardly any question about whether the patient has it or not,” says Felipe Medeiros, MD, PhD, the Joseph A.C. Wadsworth Distinguished Professor of Ophthalmology, vice chair for technology and professor of biostatistics and bioinformatics at Duke University School of Medicine. “Human grading of fundus photographs can serve as a reliable gold standard for DR diagnosis. This makes it easier to develop AI models that can be trained to recognize diabetic retinopathy on a photograph, but glaucoma isn’t quite like that. It may be a tricky disease to diagnose in the early stages.”

Glaucoma is characterized by retinal ganglion cell death, axon death and subsequent excavation of the neuroretinal rim.1 However, optic nerve head size varies greatly among individuals, ranging from 2.10 mm to 2.35 mm,2 which makes disease detection tricky.3 Disc size also varies by age and ethnicity.

“If you show an optic nerve image to different experts, it’s likely that they’ll disagree whether it’s glaucomatous or not, especially in early stages of the disease,” Dr. Medeiros continues. “Graders tend to over- or underestimate glaucomatous damage and have low grading reproducibility and poor agreement.4 Therefore, training AI models to predict subjective gradings is problematic.”

In the case of fundus photo analysis, if images are deemed ungradable, i.e., the expert readers couldn’t come to a consensus, then those images are usually excluded from the training sets, points out Sophia Ying Wang, MD, MS, an assistant professor of ophthalmology and a glaucoma specialist at Stanford University. “This may hinder the algorithm’s ability to recognize glaucoma in real-world datasets,” she says. In fact, a study using visual field data found that including unreliable visual fields in training improved the algorithm’s performance in predicting future visual field mean deviation.5

Experts say glaucoma’s lack of consistent objective diagnostic criteria may explain why AI in glaucoma hasn’t achieved as widespread an application as AI in retina or cornea. This problem has generated questions about how best to diagnose and detect glaucoma using AI and how to train these AI models.

Since there’s no consensus definition of glaucoma yet, AI investigators devise their own definitions to classify disease into categories such as “suspect,” “certain,” “referable glaucomatous optic neuropathy,” “probable” or “definite.”6 Dr. Wang points out that using binary definitions of glaucoma such as “probable/definite” may catch advanced cases but limits the detection of early cases, while terms such as “referable” may be useful when there are too many false positives. “Multiple groups attempt to predict similar outcomes in different ways, and this reduces the ability to compare performance between studies,” she says.

A large, international, crowdsourced glaucoma study on patient data and grading is currently under way, led by researchers at Dalhousie University in Nova Scotia. The Crowd-Sourced Glaucoma Study aims to identify objective criteria on visual fields and OCT that match glaucoma specialists’ assessments of disease likelihood.7 The study spans 15 countries from five continents. Dr. Wang participated as one of the expert graders. “It’s a very exciting way to both collect glaucoma patient data and collect grader ratings to assess inter-rater reliability,” she says.

“In addition to a clinical definition, we also need a so-called ‘computable’ definition, using the data elements that we commonly have in the datasets that we use for AI studies, such as EHRs,” Dr. Wang says. “This is so that AI algorithms can be compared with each other, or even validated in different datasets. Standardizing and harmonizing definitions of glaucoma is especially tricky as available data elements from health records can vary in different settings and studies. AI work goes hand-in-hand with developing data standards.”

Consensus-defined glaucoma will be necessary to confront the growing prevalence of the disease and the need for large-scale screening. Dr. Medeiros says an effective screening model for glaucoma must be inexpensive, widely available, highly accurate and easy to administer.

The imaging systems required for glaucoma detection are expensive, and trained technicians are needed to operate them. Most community screening locales, such as primary care offices and community centers, don’t have such devices. While there are less expensive ways of imaging patients, such as with smartphone or other handheld retinal cameras, these modalities aren’t as powerful as tabletop systems. Additionally, Louis R. Pasquale, MD, a professor of ophthalmology at the Icahn School of Medicine and in practice at the New York Eye & Ear Infirmary of Mount Sinai, notes that the variability in quality of different fundus cameras, from tabletop devices to smartphone cameras, is going to affect an algorithm’s generalizability.

Different devices also can’t use the same algorithm. “Just as the results from one OCT machine can’t be directly compared to those of another, an AI model trained on a Spectralis machine might not perform the same way in a Cirrus,” Dr. Pasquale says.

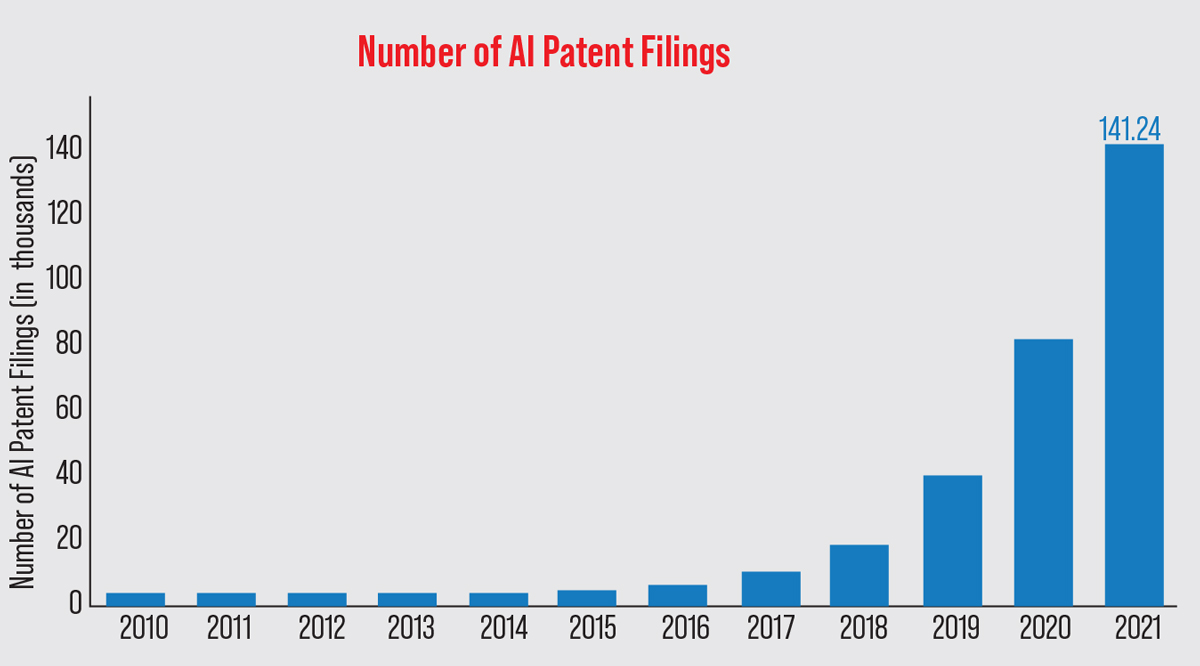

Artificial intelligence research has boomed in the past few years. Stanford University’s AI Index Report revealed that in 2021, the number of AI patents filed globally was 30 times higher than in 2015. East Asia and the Pacific filed 62.1 percent of all patent applications, followed by North America (17.07 percent) and Europe and Central Asia (4.16 percent).20 (Data from The Center for Security and Emerging Technology 2022 AI Index Report.) Photo: Stanford 2022 AI Index Report.

Data Availability

Large amounts of data and centralized data registries will be indispensable for training and validating algorithms, experts say.6 “You can’t train an AI algorithm with only 100 patients,” Dr. Pasquale says. “Once you get an algorithm that works, then you need to ensure it doesn’t go off the rails and become incorrect over time. You need large datasets and constant surveillance to maintain the functionality of an AI algorithm.”

In addition to the fact that large, centralized datasets aren’t widely available yet, sharing the existing data isn’t easy either because of institutions’ differing data privacy policies and the confidential nature of health-care data in general. “As we build more generalizable algorithms, we need to improve the diversity of our training data and validate algorithms externally,” Dr. Wang says. “It’s difficult to share health data among sites. Right now, there’s a lot of work developing training methods that allow algorithms to be trained on different sites’ data but without exchanging the data. This is called federated learning. It can help to address issues of data privacy, security and access.”
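To make the idea concrete, here is a minimal sketch of federated averaging, one common way to implement federated learning. Everything in it is illustrative: the site names, the toy one-feature linear model and the synthetic numbers are assumptions, not details from any study discussed here. Each site fits the model on its own data and shares only its updated weights, and a coordinator averages those weights in proportion to each site's size.

```python
# Toy federated averaging: sites share model weights, never patient rows.
# Model, data and site names are hypothetical and for illustration only.

def local_update(weights, data, lr=0.1, epochs=20):
    """Run a few gradient-descent steps on one site's private data.

    data is a list of (x, y) pairs; the model is y = w * x + b.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(site_weights, site_sizes):
    """Average the sites' weights, weighting each site by its sample count."""
    total = sum(site_sizes)
    w = sum(wt[0] * n for wt, n in zip(site_weights, site_sizes)) / total
    b = sum(wt[1] * n for wt, n in zip(site_weights, site_sizes)) / total
    return (w, b)


def train_federated(sites, rounds=100):
    """Alternate local training and central averaging for a number of rounds."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites.values()]
        sizes = [len(data) for data in sites.values()]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Two hypothetical sites holding synthetic data generated from y = 2x + 1.
sites = {
    "site_a": [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0, 3.0)],
    "site_b": [(x, 2 * x + 1) for x in (1.5, 2.5, 3.5)],
}
w, b = train_federated(sites)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Only the weight pairs cross site boundaries in this sketch. Real deployments typically add safeguards such as secure aggregation, which are outside its scope.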

Generalizability

It’s important to have a good understanding of how AI models are developed in order to understand their limitations, says Dr. Medeiros. “Before clinicians use, say, a prediction model, they should understand how the algorithm was developed, the population it was trained on and that population’s characteristics,” he says. “It’s really no different than any medical test. When you’re using a medical test in your clinical practice, such as an OCT or visual field, you need to know the test’s properties and the characteristics of the normative database so you can rely on the results and determine whether they’re applicable to your patient.”

Algorithms trained on one kind of patient aren’t necessarily generalizable to other kinds of patients. For instance, the training set for an algorithm developed in a Chinese population might contain a large number of high myopes, which could make the algorithm unsuitable for use in a population with different ocular characteristics.

“Generalizability is important if there’s a discrepancy between the population you wish to deploy your algorithm on and the population you trained your algorithm on,” Dr. Wang says. “As an aside, one could argue that not all AI algorithms have to be universally generalizable if you were planning to train and deploy locally in your own unique population. In fact, it’s much harder to develop one algorithm to rule them all, and I don’t think that’s necessarily a goal we want to have, especially as we enter the era of precision medicine.

“We have to be really careful when we train models to ensure we aren’t training them on inherently biased data and perpetuating that bias,” she continues. “For instance, if we try to predict how sick someone is based on how many medical claims they have, this number may be different among minorities for reasons such as unequal access to care.

“As we enter the deployment phase, we also need to be aware of any differences between the training population and the intended patients. If the algorithm’s performance isn’t as good in a certain subgroup of patients, it will negatively impact that group and refer them for treatment inappropriately.”

Current Research

In traditional studies, researchers attempt to arrive at some kind of scientific truth. In AI studies looking at prediction or classification, the algorithm’s prediction performance is paramount, notes Dr. Wang.

There are numerous AI studies in the glaucoma space right now, with algorithms for several applications, from detecting structural changes in the eye to predicting which patients might rapidly worsen. Here’s a sampling of the ongoing research:

• Using fundus photos to identify glaucomatous eyes. In 2017, researchers trained a deep learning system to evaluate glaucoma using 125,189 retinal images.8 The algorithm’s performance was validated on 71,896 images. The study reported a prevalence of 0.1 percent, with an area under the curve for possible glaucoma of 0.942 (95% CI, 0.929 to 0.954), a sensitivity of 96.4 percent (95% CI, 81.7 to 99.9 percent), and a specificity of 87.2 percent (95% CI, 86.8 to 87.5 percent).

A deep learning study published in 2018 for detecting glaucomatous optic neuropathy from color fundus photographs demonstrated 95.6-percent sensitivity and 92-percent specificity to detect “referable” GON.9 More than 48,000 photos were graded for GON by ophthalmologists in the study. The researchers noted that high myopia caused false negatives and physiologic cupping caused false positives.

Another deep learning study for detecting GON using Pegasus, a free AI system that’s available in the Orbis Cybersight Consult Platform, reported that the algorithm outperformed five out of six ophthalmologists in diagnosis, with an area under the curve of 92.6 percent vs. ophthalmologists’ 69.6 to 84.9 percent.10 The best-case consensus scenario area under the curve was 89.1 percent. The algorithm’s sensitivity was 83.7 percent and the specificity was 88.2 percent, compared with 61.3 to 81.6 percent sensitivity and 90 to 94 percent specificity for ophthalmologists (intraobserver agreement 0.62 to 0.97 vs. 1.00 for Pegasus). The correlation between observations and predictions was 0.88 (p<0.001; MAE: 27.8 µm). The researchers noted the algorithm could determine classification in 10 percent of the time it took ophthalmologists. They suggested the tool could be valuable for screening patients.
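For readers who want to see how the figures in these studies relate to one another, the sketch below computes sensitivity and specificity from a confusion matrix and then applies Bayes' rule to estimate positive predictive value. The confusion-matrix counts are hypothetical. The final calculation plugs in the sensitivity, specificity and prevalence quoted above for the 2017 study and assumes, purely for illustration, that all three apply to the same screened population.

```python
# Screening-metric arithmetic. Function names and counts are illustrative.

def sensitivity(true_pos, false_neg):
    """Share of diseased eyes the test flags."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg, false_pos):
    """Share of healthy eyes the test clears."""
    return true_neg / (true_neg + false_pos)


def positive_predictive_value(sens, spec, prevalence):
    """Probability that a flagged eye truly has disease (Bayes' rule)."""
    flagged_diseased = sens * prevalence
    flagged_healthy = (1 - spec) * (1 - prevalence)
    return flagged_diseased / (flagged_diseased + flagged_healthy)


# Hypothetical confusion-matrix counts, not taken from any study.
print(sensitivity(true_pos=90, false_neg=10))
print(specificity(true_neg=850, false_pos=150))

# Figures quoted in the text for the 2017 study, under the stated assumption.
ppv = positive_predictive_value(sens=0.964, spec=0.872, prevalence=0.001)
print(f"PPV = {ppv:.4f}")
```

The calculation shows why prevalence matters for screening: when a disease is rare in the screened population, even a test with high sensitivity and specificity produces mostly false positives.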

• Predicting OCT metrics from fundus photos. Dr. Medeiros’ group has developed a machine-to-machine AI model that was able to objectively predict complex OCT metrics such as nerve tissue thickness from a fundus photograph to be used for glaucoma diagnosis. Machine-to-machine learning enables devices to exchange data without requiring human input for network training.

On a test set of 6,292 pairs of fundus photos and OCTs, the mean predicted RNFL thickness was 83.3 ±14.5 µm and the mean observed RNFL thickness was 82.5 ±16.8 µm (p=0.164) with strong correlation between the two (r=0.832; p<0.001).11

Fundus photography has been an underused resource in the OCT-heavy glaucoma subspecialty, experts point out, but AI is beginning to change that. “The algorithm is quite powerful, and we’ve shown it’s able to detect disease and predict damage and progression over time,” Dr. Medeiros says. “From a disease-detection standpoint, using a low-cost imaging method such as photography could benefit patients in locations where there’s less access to care.”
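Agreement between predicted and measured RNFL thickness, as in the test-set comparison above, is usually summarized with a correlation coefficient and an error measure. The following sketch shows how Pearson's r and mean absolute error are computed for paired values. The five thickness pairs are made up for illustration and are not data from the study.

```python
from math import sqrt

# Hypothetical paired RNFL thickness values in micrometers.
observed = [60.0, 72.5, 85.0, 91.0, 103.5]   # e.g., measured by OCT
predicted = [66.0, 75.0, 83.0, 88.5, 98.0]   # e.g., estimated from photos


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def mean_absolute_error(pred, obs):
    """Average absolute difference between predictions and observations."""
    return sum(abs(p - o) for p, o in zip(pred, obs)) / len(pred)


r = pearson_r(predicted, observed)
mae = mean_absolute_error(predicted, observed)
print(f"r = {r:.3f}, MAE = {mae:.1f} um")
```

The two numbers answer different questions: correlation captures whether predictions rise and fall with the measurements, while mean absolute error captures how far off a typical prediction is in micrometers.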

• Incorporating multiple modalities. Dr. Medeiros says his group is also working on integrating multiple imaging modalities and using AI to recognize patterns of damage on OCT instead of relying on summary parameters to diagnose glaucoma.

“There’s much more to an OCT image than the summary parameters,” he continues. “AI models that have been developed and ones we’ve published on are able to perform a much more comprehensive evaluation of the image for glaucoma assessment and diagnosis, as well as recognize artifacts and segmentation errors. This is very important because OCT is only as good as the scan quality. Incorporating AI models into the current software will help flag artifacts and errors with greater accuracy, and technicians acquiring scans could re-scan right away.”

• Assessing the optic disc. In a cross-sectional study on quantifying neuroretinal rim loss, 9,282 pairs of optic disc photographs and SD-OCT optic nerve head scans from 927 eyes were used to train and validate a deep-learning convolutional neural network to predict BMO-MRW global and sectoral values.12 The algorithm could quantify the amount of neuroretinal damage on the photographs with high accuracy using the BMO-MRW as a reference, Dr. Medeiros’ group reported.

• Mapping structure to function. Using SD-OCT to image the RNFL provides more data than red-free RNFL photographs, researchers noted in a 2020 TVST study.13 They used a convolutional neural network trained to predict SAP sensitivity thresholds from peripapillary SD-OCT RNFL thickness in glaucoma patients to generate topographical information about the structure-function relationship from simulated RNFL defects. They reported the AI-generated map provides “insights into the functional impact of RNFL defects of varying location and depth on OCT.”

• Creating clinical forecasting tools. “Currently, we arbitrarily pick a target intraocular pressure based on patient age, how much damage is present, and the IOP level associated with the damage,” Dr. Pasquale says. “But if the patient were identified as a red flag for fast progression by a validated artificial intelligence algorithm, we might choose a lower target IOP to prevent significant vision loss.

“Currently there’s no such algorithm [in the clinic] because it takes considerable time and effort to build; however, I’m very hopeful and excited that those algorithms will be available in the future.”

Developing a clinical forecasting tool might involve training an algorithm on patient data such as optic nerve photos, visual fields and OCTs at baseline and follow-up. Such an algorithm might then be able to predict from baseline images whether a given patient will be a fast or slow progressor, for example. In one study using 14,034 scans of 816 eyes followed over time and labeled as progression or stable by experts, researchers trained a deep learning model to detect glaucoma progression on SD-OCT. The area under the curve was 0.935, and the researchers reported that the model performed well, closely replicating expert human grading.14

“Many glaucoma patients are stable and are followed over time by ophthalmologists, but about five to 10 percent of patients ‘fall off the cliff,’ ” says Jithin Yohannan, MD, MPH, an assistant professor of ophthalmology at Wilmer Eye Institute, Johns Hopkins University School of Medicine. “Using AI to identify those patients would be incredibly useful, because these are the patients who’d benefit most from closer follow-up and possibly early or more aggressive therapy.” His group has trained AI models that use a patient’s initial visual field, OCT and clinical information to forecast their risk of rapid worsening or surgery for uncontrolled glaucoma.

“In the future, models such as these might serve as a flagging system of sorts, letting the clinician know they might want to pay extra attention to a particular patient,” he says. “Comprehensive ophthalmologists or optometrists could learn sooner which patients require referral to glaucoma specialists.”
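The area under the curve cited above for the progression-detection model has a simple interpretation: it's the probability that the model gives a randomly chosen progressing eye a higher score than a randomly chosen stable eye. The sketch below computes it directly from that definition. The scores and labels are invented for illustration.

```python
# AUC from its pairwise definition. Scores and labels are hypothetical.

def area_under_curve(scores, labels):
    """Fraction of (positive, negative) pairs ranked correctly.

    labels use 1 for progressing and 0 for stable; ties count as half.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


scores = [0.90, 0.80, 0.35, 0.60, 0.20, 0.10]   # model outputs
labels = [1, 1, 1, 0, 0, 0]                     # expert labels
auc = area_under_curve(scores, labels)
print(f"AUC = {auc:.3f}")
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two groups.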

• Tools to detect ongoing worsening. Detecting early visual field loss may become easier with AI. One study in 2013 using an artificial neural network to assess visual fields for glaucoma diagnosis reported 93 percent sensitivity, 91 percent specificity and diagnostic performance that was at least as good as clinicians.15 An unsupervised model for analyzing visual fields was able to identify clinically relevant loss patterns and assign weighted coefficients for each.16

Another study of 2,085 eyes’ visual fields used machine-learning analysis to consistently detect progressing eyes earlier than global-, region- and point-wise indices.17 The time to detect progression in a quarter of the eyes was 5.2 years using global mean deviation, 4.5 years using region-wise indices, 3.9 years using point-wise indices and 3.5 years using machine-learning analysis. After two additional visits, the time until a quarter of eyes demonstrated subsequently confirmed progression was 6.6 years with global indices, 5.7 years with region-wise indices, 5.6 years with point-wise indices and 5.1 years with machine-learning analysis.

Dr. Yohannan’s group has trained models that detect visual field worsening on a series of visual fields over time. Because there’s no gold standard definition of what visual field worsening is, the model his group developed uses a consensus of many previously used algorithms to label the eye as worsening or not worsening.

“When we compared the model to clinicians routinely seeing patients in the office, our model performed better than clinicians in our specific dataset,” he continues. “Now, our group is using Wilmer patient EHR data, digital field information and optic nerve images to develop AI algorithms that can detect glaucoma worsening more quickly and accurately and then predict which eyes or patients are at high risk for future worsening. Our goal is to follow those eyes or patients more closely, compared to the average patient who walks into the clinic.”
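The general idea of labeling an eye by consensus when no gold standard exists can be sketched as a majority vote across several rules. The three rules and their thresholds below are hypothetical stand-ins chosen for simplicity. They are not the algorithms Dr. Yohannan's group used, and the mean deviation (MD) series are invented.

```python
# Majority-vote labeling of visual field worsening.
# All rules, thresholds and data are hypothetical illustrations.

def md_slope(years, md):
    """Least-squares slope of mean deviation over time, in dB per year."""
    n = len(years)
    mean_t, mean_m = sum(years) / n, sum(md) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in zip(years, md))
    den = sum((t - mean_t) ** 2 for t in years)
    return num / den


def rule_slope(years, md):
    return md_slope(years, md) < -0.5           # steep downward trend


def rule_endpoint(years, md):
    return md[-1] - md[0] < -2.0                # large first-to-last drop


def rule_halves(years, md):
    half = len(md) // 2
    early = sum(md[:half]) / half
    late = sum(md[-half:]) / half
    return late - early < -1.5                  # later tests clearly worse


RULES = (rule_slope, rule_endpoint, rule_halves)


def consensus_label(years, md, rules=RULES):
    """Label the eye as worsening if more than half of the rules agree."""
    votes = sum(rule(years, md) for rule in rules)
    return "worsening" if votes > len(rules) / 2 else "not worsening"


years = [0, 1, 2, 3, 4]
print(consensus_label(years, [-2.0, -2.8, -3.9, -4.7, -6.1]))
print(consensus_label(years, [-2.0, -2.3, -1.9, -2.2, -2.1]))
```

Labels produced this way can then serve as training targets for a model, which is the role the consensus plays in the approach described above.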

• Identifying patients for clinical trials. Dr. Yohannan says his group is also working on using AI to improve clinical trials in glaucoma. “Clinical trials, particularly of neuroprotective agents, require large sample sizes,” he explains. “If you’re able to identify eyes that are high risk for getting worse you can recruit those patients into your clinical trial, and that can actually reduce your sample size requirements. This would make it more cost-effective to do some of these studies.”

Studies reviewing AI in clinical trials have also noted that better patient selection could reduce harmful treatment side effects.18 Additionally, researchers note that using AI in patient selection could reduce population heterogeneity by harmonizing large amounts of EHR data, selecting patients who are more likely to have a measurable clinical endpoint and identifying those who are more likely to respond to treatment.19

This AI-based recruitment would work by analyzing EHRs, doctors’ notes, data from wearable devices and social media accounts to identify subgroups of individuals who meet study inclusion criteria and by helping to spread the word about trials to potential participants. However, this type of implementation still faces data privacy and machine interoperability hurdles. Experts note that final decisions about trial inclusion will still rest with humans.
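The link between recruiting higher-risk eyes and smaller trials can be illustrated with the standard normal-approximation formula for comparing two proportions. In the sketch below, the progression rates and the assumed treatment effect are hypothetical numbers chosen only to show the direction of the effect, not estimates from any trial.

```python
from math import ceil
from statistics import NormalDist

# Sample size per arm for comparing two proportions (normal approximation).
# Event rates and treatment effect below are hypothetical.

def n_per_arm(p_control, relative_reduction, alpha=0.05, power=0.80):
    """Participants needed per arm to detect the given relative reduction."""
    p_treated = p_control * (1 - relative_reduction)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    effect = p_control - p_treated
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Same assumed 30 percent relative reduction in progression in both cases.
unselected = n_per_arm(p_control=0.10, relative_reduction=0.30)
enriched = n_per_arm(p_control=0.30, relative_reduction=0.30)
print(f"unselected cohort: {unselected} per arm")
print(f"high-risk cohort:  {enriched} per arm")
```

With the same relative treatment effect, a cohort in which more control eyes progress yields a larger absolute difference between arms, so fewer participants are needed to detect it.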

• Tools to reduce the burden of glaucoma testing. “Visual field testing is time-consuming and requires significant patient cooperation,” Dr. Yohannan says. “OCT is quicker and more reliable, and if we’re able to detect significant functional worsening (i.e., visual field worsening) using OCT data that could reduce the need for visual field testing. Our group has shown that we can detect VF worsening with OCT data in a subset of patients. This may greatly reduce the need for VF testing in the future.”

• Forecasting from clinical notes. “My group is very interested in leveraging the richness of the information captured by physicians in free-text clinical progress notes to augment and improve our AI prediction algorithms,” Dr. Wang says. “We adapt natural language processing techniques to work for our specialized ophthalmology language and combine it with other structured clinical information from EHRs to predict glaucoma progression.”

Her group maps words onto numbers so they’re computable by the algorithm. “It’s a technique that takes English language words and turns them into vectors and vector space, so the computer can discern the meaning of the words based on other words close by in the speech or text. We’ve adapted this to work for ophthalmological words. We’re using this to predict whose vision will recover and which patients will need glaucoma surgery in the future.”
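A toy version of the words-to-vectors idea is sketched below. It swaps in simple co-occurrence counting for the learned embeddings that modern natural language processing typically uses, so it should be read as an illustration of the principle rather than the method Dr. Wang's group applies. The note snippets are invented.

```python
from collections import Counter, defaultdict
from math import sqrt

# Words become vectors of counts of the words that appear near them.
# The corpus is hypothetical; real systems learn dense embeddings instead.

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of neighbors within the given window."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start, stop = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[key] * v[key] for key in u if key in v)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


notes = [
    "iop elevated in right eye",
    "pressure elevated in left eye",
    "iop stable in right eye",
    "pressure stable in left eye",
    "patient started on latanoprost drops",
]
vectors = cooccurrence_vectors(notes)
similar = cosine(vectors["iop"], vectors["pressure"])
unrelated = cosine(vectors["iop"], vectors["latanoprost"])
print(f"iop vs. pressure: {similar:.2f}, iop vs. latanoprost: {unrelated:.2f}")
```

Because "iop" and "pressure" appear in the same contexts in this toy corpus, their vectors point in nearly the same direction, which is how a computer can treat them as related without being told what either word means.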

• Learning about disease pathogenesis. Dr. Pasquale is using AI to research primary open-angle glaucoma pathogenesis. He and his colleagues hypothesize that glaucoma is multiple diseases rather than a single disease. “We’re arguing that different patterns of nerve damage, which are reflected by different patterns of visual field loss, might give us clues as to how to better stratify the disease,” he says.

Using new-onset POAG cases in two large-cohort studies, the Nurses’ Health Study and the Health Professionals’ Follow-up Study, he and his colleagues digitized visual fields and used archetype analysis to objectively quantify the different patterns of visual field loss. The algorithm identified 14 different patterns of loss, four of which were advanced-loss patterns.

“It’s interesting to see that a health professional who has access to health care might present with a very significant amount of advanced loss, but this happens frequently in glaucoma because it’s such an insidious-onset disease,” he notes. “While analyzing potential racial predispositions for different field loss patterns, we found that African heritage was an independent risk factor for advanced-loss patterns. Our long-term goal is to identify environmental, genetic, or metabolomic determinants of the disease subtypes.”

What’s Needed for Clinical Readiness?

It’s going to take a lot of effort to get AI into the clinical decision-making process. “In the clinic, we collect large amounts of data,” Dr. Pasquale says. “In a single visit, we might get a visual field, an OCT and a fundus photograph, and we just don’t have enough time to digest that data, especially as it accumulates over time for a patient. We’re leaving a lot of information on the table in our decision-making process, and even if we spent hours staring at it, we probably couldn’t wrap our heads around all of it. It’d be great if we had an AI algorithm do that for us. The challenge here would be integrating useful glaucoma AI algorithms into the EHR system.”

Getting an approved algorithm into an EHR in the first place will require working closely with industry, Dr. Yohannan points out. “For instance, our EHR (Epic) doesn’t talk to our imaging data management system (Zeiss Forum). We’ll need to come up with an integrated solution so all of these data can be input into the models so the clinician has easy access to an AI risk score.”

It’s a tall order. “It already takes a lot of effort just to make a simple change in an EHR,” Dr. Pasquale says. “Let’s say an approved algorithm is integrated into an EHR system. What will happen is that a fundus photograph or OCT will have to be dipped into a dialog box so it can be analyzed to say whether there’s glaucomatous damage, whether a patient is getting worse, or maybe is a fast progressor. Subjecting clinical data to an AI algorithm takes time and it may hamper clinicians’ ability to see patients. There’s also the feeling among doctors of, ‘I know my patient best and I don’t need a computer to tell me what to do.’ This sentiment, plus the notion that technology is entangling physicians rather than empowering them, is understandable and needs to be addressed as we think about implementing AI into an EHR.”

Next in Line

“In the near future, I think it’s likely we’ll see more algorithms combining multiple sources such as OCTA, visual fields and OCT of the optic nerve or macula along with the whole-patient picture from EHRs, including coexisting diseases and demographics,” he continues. “Algorithms such as these could offer recommendations for how frequently a patient needs to be seen, whether a certain patient will do better with drops compared to laser, or whether a certain patient is more likely to miss drops, for instance.

“Now, clinicians wouldn’t use such an algorithm blindly,” he says. “AI is going to be an instrument to help clinicians make better use of the available diagnostic information and tests. Any recommendations will be taken into consideration by the clinician when making decisions.”

Dr. Yohannan hopes to use raw OCT images to obtain more data. “Currently we use mostly numeric information that comes from the OCT machine,” he says. “We’re working on extracting raw images, which contain more information than the numbers. The question then is, is there a way we can actually extract better information from those images and give that to the models we’re creating to forecast visual field worsening or detect worsening with even greater power?”

Merging genomic and clinical data may be another future AI development since genomic data can be an independent predictor of glaucoma with a modest level of success. “We’ve found that having a greater genetic burden for the disease increases the risk of needing filtration surgery,” says Dr. Pasquale. “We’ve identified 127 loci for POAG, and we could create a genetic risk score from that data to predict glaucoma with about 75-percent accuracy. That’s all without considering imaging data. Imagine what’s possible if we merge imaging data with genomic data.

“More hospitals are developing biorepositories of high-throughput genetic data,” he adds. “It’s all research right now and not directly connected to EHRs, but perhaps in 10 years when we have acceptance of genomic markers associated with various common complex diseases, it could be merged with imaging data to create more powerful clinical decision-making algorithms.”

“It’s early to say what might actually be deployed and adopted in a widespread fashion,” says Dr. Wang. “As in every field, there is a large chasm between development of an AI algorithm in code, and actually deploying it in the clinic on patients. It may be that some of the first algorithms to be deployed would be glaucoma screening algorithms, or there may be clinical decision support tools to help clinicians predict a glaucoma patient’s clinical trajectory.”

Dr. Medeiros receives research support from Carl Zeiss Meditec, Heidelberg Engineering GmbH, Reichert, Genentech and Google. He’s a consultant for Novartis, Aerie, Allergan, Reichert, Carl Zeiss, Galimedix, Stuart Therapeutics, Annexon, Ocular Therapeutix and Perceive Biotherapeutics. Dr. Wang and Dr. Yohannan receive funding from the NIH and Research to Prevent Blindness. Dr. Yohannan also receives research support from Genentech and is a consultant for AbbVie, Ivantis and Topcon. Dr. Pasquale receives NIH support for his AI research.

1. Bourne RRA. The optic nerve head in glaucoma. Community Eye Health 2012;25:79-80:55-57.

2. Tekeli O, Savku E, Abdullayev A. Optic disc area in different types of glaucoma. Int J Ophthalmol 2016;9:8:1134-1137.

3. Ting DSW, Pasquale LR, Peng L, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol 2019;103:2:167-175.

4. O’Neill EC, Gurria LU, Pandav SS, et al. Glaucomatous optic neuropathy evaluation project: Factors associated with underestimation of glaucoma likelihood. JAMA Ophthalmol 2014;132:5:560-566.

5. Villasana GA, Bradley C, Elze T, et al. Improving visual field forecasting by correcting for the effects of poor visual field reliability. Transl Vis Sci Technol 2022;11:5:27.

6. Lee EB, Wang SY, Chang RT. Interpreting deep learning studies in glaucoma: Unresolved challenges. Asia Pac J Ophthalmol (Phila) 2021;10:261-267.

7. Vianna JR, Quach J, Baniak G, Chauhan BC. Characteristics of the Crowd-sourced Glaucoma Study patient database. Invest Ophthalmol Vis Sci 2020;61:7:1989. ARVO Meeting Abstract June 2020.

8. Ting DSW, Cheung CY, Lim G, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 2017;318:22:2211-2223.

9. Li Z, He Y, Keel S, et al. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology 2018;125:1199-1206.

10. Al-Aswad L, Kapoor R, Chu CK, et al. Evaluation of a deep learning system for identifying glaucomatous optic neuropathy based on color fundus photographs. J Glaucoma 2019;28:12:1029-1034.

11. Medeiros FA, Jammal AA, Thompson AC. From machine to machine: An OCT-trained deep learning algorithm for objective quantification of glaucomatous damage in fundus photographs. Ophthalmology 2019;126:4:513-521.

12. Thompson AC, Jammal AA, Medeiros FA. A deep learning algorithm to quantify neuroretinal rim loss from optic disc photographs. Am J Ophthalmol 2019;201:9-18.

13. Mariottoni EB, Datta S, Dov D, et al. Artificial intelligence mapping of structure to function in glaucoma. Transl Vis Sci Technol 2020;9:2:19.

14. Mariottoni EB, Datta S, Shigueoka LS, et al. Deep learning assisted detection of glaucoma progression in spectral-domain optical coherence tomography. [In press].

15. Andersson S, Heijl A, Bizios D, Bengtsson B. Comparison of clinicians and an artificial neural network regarding accuracy and certainty in performance of visual field assessment for the diagnosis of glaucoma. Acta Ophthalmol 2013;91:5:413-417.

16. Elze T, Pasquale LR, Shen LQ, et al. Patterns of functional vision loss in glaucoma determined with archetypal analysis. J R Soc Interface 2015;12.

17. Yousefi S, Kiwaki T, Zheng Y, et al. Detection of longitudinal visual field progression in glaucoma using machine learning. Am J Ophthalmol 2018;193:71-79.

18. Cascini F, Beccia F, Causio FA, et al. Scoping review of the current landscape of AI-based applications in clinical trials. Front Public Health. [Epub August 12, 2022]. https://doi.org/10.3389/fpubh.2022.949377.

19. Bhatt A. Artificial intelligence in managing clinical trial design and conduct: Man and machine still on the learning curve? Perspect Clin Res 2021;12:1:1-3.

20. Lynch S. The state of AI in 9 charts. Stanford Institute for Human-centered Artificial Intelligence Annual AI Index Report. Updated March 16, 2022. Accessed October 14, 2022. https://hai.stanford.edu/news/state-ai-9-charts.