
Growing up, I was a huge nerd. Didn’t much like people, still don’t. I just wanted to find a quiet corner and read. And what I read was frequently science fiction. It was modern, it was exciting, it had lots of science, and it usually described a far better place to live than the reality around me. OK, that’s not fair. I had a good childhood. Not without its sorrows, but a good one. Just not as good as the worlds I inhabited in my head. And, especially in the 1960s, those fictional worlds were full of robots (all sorts of robots) and computers, mostly depicted as benign and useful helpers to mankind but, on occasion, as evil.

The grandmaster of science fiction, and of robots, was Isaac Asimov. His oeuvre is extensive, and much of it involves robots, but not all. Surprisingly, the original “Foundation” trilogy has not a single robot in it. His story collection “I, Robot,” on the other hand, is obviously all about them (it loosely inspired a movie of the same name starring Will Smith). Asimov really did define robot storytelling, almost inventing it out of whole cloth. This allowed him to define the conversation, most notably with his three laws of robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These rules, which humanity imposed on its robots, were meant to ensure their safe and helpful nature. But as with so much in life, words are subject to interpretation, and the law of unintended consequences trumps all others, robotic or otherwise. Therein lies the basis for so many of his stories, and for the consternation we feel now, in 2023, as real life starts to approach the science fiction of my childhood.

Well, we don’t yet have fully functioning robots, but in a way few foresaw decades ago, artificial intelligence has become as powerful as, or more powerful than, a shiny metal creature looking at you with glowing eyes. AI doesn’t require a physical form. Software is more powerful than the strongest titanium, and far more insidious. Our devotion to technology and automation has pushed science to automate everything we do: to craft machines, virtual or real, that can find, sort, analyze, suggest and implement solutions to almost any problem without help, without supervision and, if we so wish, potentially without restrictions. And here’s the problem: a very imperfect species is crafting a machine, nay, an intelligence, that’s trying to be perfect. But it will inevitably be just as imperfect as we are.

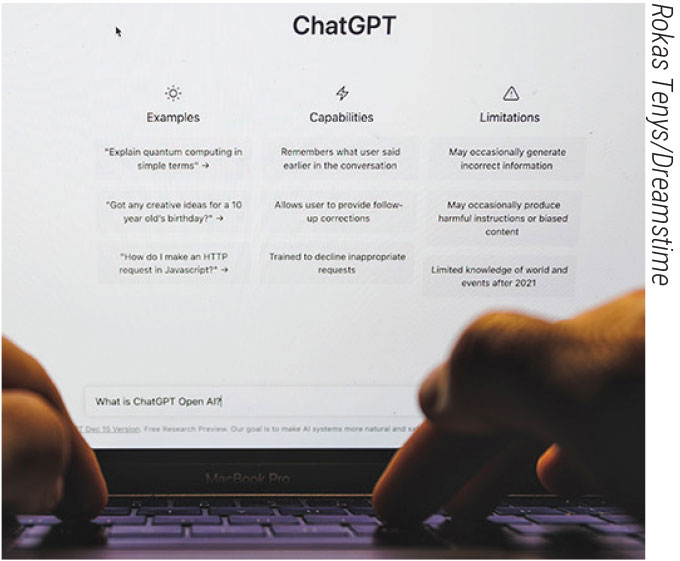

At this point everyone is aware of ChatGPT and other AI programs that have gone, or soon will go, far beyond what their inventors imagined. Given our insatiable need to craft something in our own image, these AIs are increasingly indistinguishable from us. Who’s at the other end of that email, Facebook post or tweet? A human or a bot? Who stole your identity, a person or a software program? We’re already in the fog. It’s charming to read the older sci-fi stories, in which artificial intelligence was mostly physical, usually a metal robot, and the harm it could do was frequently physical too. Asimov’s three laws of robotics focused on preventing harm to humans.

To his credit, Asimov quickly realized that not all harm is intentional and not all harm is physical. In their desire to protect, his robots prevented humans from doing stupid things. But define “stupid.” Foolish? Suicidal? Risky? Who gets to say what it means? Maybe it’s the AI. And maybe what it protects is not just individual humans, but humanity. Think big. AIs certainly do and will. So Asimov amended his three laws, adding a law to precede the first, one that states that “robots may not harm humanity, or through inaction allow humanity to come to harm.”

Substitute AI for robot. What will our new overlords allow us to do individually or as a species? Perhaps they’ll lock us away for our own safety. In the end, the law of unintended consequences will triumph again, even over Asimov’s “zeroth” law.

Dr. Blecher is an attending surgeon at Wills Eye Hospital.